.jpg)

Modern data integration connects structured, unstructured, and streaming sources—far beyond slow, manual bulk uploads. In 2025, 93% of IT leaders say streaming platforms are key to meeting data goals. Cloud-native systems, real-time apps, and hybrid architectures demand scalable, reliable integration. You’ll use modern data integration, data pipeline optimization, and real-time architecture to break down silos, speed access, and improve decision‑making.

This guide walks you through the architecture, tools, best practices, ROI impact, and common hurdles of modern data integration. It’s built for teams modernizing stacks or scaling analytics with hybrid and streaming systems.

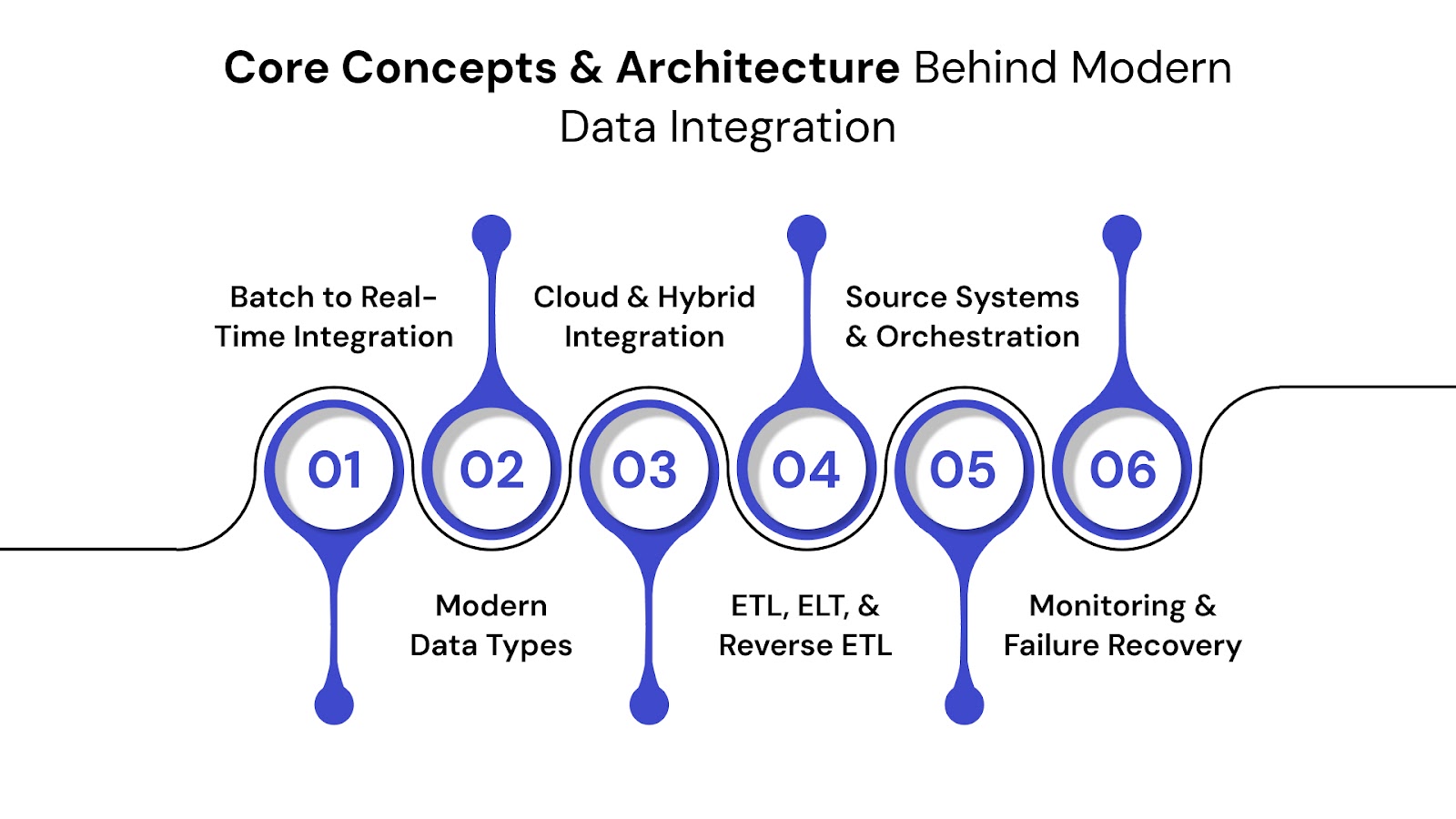

Modern data integration isn’t just about connecting systems—it’s about ensuring data flows continuously, cleanly, and contextually across cloud, on-prem, and hybrid environments. This section explains the core components, data types, and architecture models that power modern pipelines.

Data movement has shifted from nightly batch loads to real-time or near-real-time pipelines. Modern systems process data as it’s generated, reducing latency and enabling faster insights. Event-driven architecture and trigger-based workflows have replaced static, time-based jobs.

Streaming pipelines are now used in sectors like finance (real-time fraud detection), e-commerce (instant inventory updates), and IoT (live sensor analytics). Tools like Apache Kafka and Apache Flink are central to this shift, enabling data to move continuously and reactively through systems.

Integration platforms must handle three categories: structured, unstructured, and streaming data. Structured data includes relational tables from CRMs or ERPs. Unstructured data comes from logs, emails, or social media. Streaming data flows from sensors, APIs, or clickstreams.

Supporting all three ensures complete visibility across operations. For example, syncing CRM tables (structured), analyzing server logs (unstructured), and reacting to live sensor data (streaming) demands distinct processing and storage strategies—such as columnar warehouses for structured and object stores for unstructured formats.

Curious about the hurdles behind smooth data flow—and how top teams solve them?

Don’t miss this deep dive into key ingestion challenges, proven practices, and what’s next for data teams.

Each environment has unique integration demands. Cloud platforms need API-driven, scalable connections. On-premise setups may rely on file-based exchange or legacy interfaces. Hybrid is now dominant, driven by cloud adoption alongside existing infrastructure.

Common hybrid use cases include syncing on-prem ERP data with a cloud-based data lake or sending SaaS app events to a local warehouse. Solutions like Fivetran, Talend, and Boomi support such mixed architectures out-of-the-box.

ETL (Extract, Transform, Load) pulls data, processes it, and then loads it into a destination—ideal for legacy or on-prem pipelines. ELT (Extract, Load, Transform) defers transformation to modern cloud warehouses like Snowflake or BigQuery, where compute is scalable and cheap. Reverse ETL moves cleaned data from warehouses back into business apps (like CRMs or support tools) for operational use.

ELT is rising due to cloud-native design and reduced transformation bottlenecks. Reverse ETL tools like Hightouch and Census enable marketing and support teams to use analytics data in real time.

Modern integration supports diverse source systems: REST APIs, SQL/NoSQL databases, CSV/Parquet files, and event queues. Connector frameworks like Airbyte and Meltano offer prebuilt integrations and extensibility.

Orchestration is key for managing dependencies and execution logic. Tools like Apache Airflow and Prefect use DAGs (Directed Acyclic Graphs) to control task flow, retries, and scheduling, ensuring pipelines run reliably and on time.

Observability ensures that data pipelines work as expected and meet SLAs. Key metrics include data volume processed, job latency, schema mismatches, and failure rates. Monitoring tools like Monte Carlo and Datadog provide visibility into pipeline health.

Alerting on job failures or delays enables faster resolution. Some platforms offer self-healing capabilities—replaying failed runs or switching to fallback data sources automatically, reducing manual intervention and downtime.

Modern data integration isn’t just about tools—it’s about making consistent, scalable, and auditable decisions across your pipelines. The following practices help ensure your workflows are stable, future-proof, and aligned with operational goals.

Tool selection should align with your technical and business requirements. Key factors include data volume, expected latency, supported data sources, and cost structure. Real-time systems need low-latency support and event streaming, while batch-heavy pipelines may prioritize connector breadth or transformation capabilities.

Match tools to architecture: use Kafka or Flink for streaming, Airbyte or Fivetran for SaaS-to-warehouse sync, and Talend for complex on-prem pipelines. Always run a proof-of-concept using a checklist—test volume handling, schema evolution, logging, and connector stability before rollout.

Want to explore the tools that power seamless data flow across your systems? Check out this guide on the top ingestion solutions driving smarter, faster data pipelines.

Without format consistency, integration workflows break or require constant rework. Schema drift—unexpected changes in structure or field definitions—can silently corrupt data or crash pipelines. A robust schema strategy is essential.

Use standardized formats like JSON for flexibility, Parquet for analytical performance, and Avro when schema evolution is expected. Implement versioning for schemas and maintain backward compatibility using tools like Schema Registry. Validate schemas as part of pipeline checkpoints to reduce propagation of errors.

Manual validation doesn’t scale. Automate checks for null values, type mismatches, range violations, duplication, and schema conformance. These rules should be applied both at the ingestion stage and before data is written to final destinations.

Use frameworks like Great Expectations or dbt tests for column-level checks, or write custom validation scripts tied into orchestration tools like Apache Airflow. Set clear pass/fail rules and route failed data to quarantines or fallback queues.

Access management and governance ensure data flows are secure, auditable, and compliant. Role-based access control (RBAC) limits data exposure, while data lineage tools help trace issues and maintain accountability.

Use Identity and Access Management (IAM) systems built into platforms like AWS or GCP. For pipeline-level policies, implement controls using tools like Monte Carlo or governance layers in Collibra. Maintain logs for data access, transformations, and job executions to support audits and ensure policy adherence.

Even with advanced tools, modern data integration brings challenges that can degrade performance, quality, and maintainability. This section outlines the most common issues teams face and how to resolve them with proven practices and tooling.

Schema drift happens when incoming data changes format without notice—such as an API introducing a new field or changing data types. These changes can break transformations or silently corrupt downstream analytics.

Use a Schema Registry to enforce validation. Schema-on-read tools like BigQuery or Spark reduce risk by interpreting structure at runtime. Set up automated alerts for mismatches and decouple schema enforcement from transformation logic to isolate failures and simplify recovery.

Real-time pipelines often lag due to network congestion, single-threaded processing, or unoptimized transformation logic. These delays can cause outdated dashboards, missed triggers, or failed SLAs.

Improve throughput using parallel execution, buffering mechanisms, and data windowing strategies. Tools like Apache Flink or Kafka Streams are built for low-latency workloads. Monitor performance using latency and throughput metrics, and set SLA thresholds to trigger adaptive workflow responses or load balancing.

Looking to streamline and scale your integration workflows? Explore this guide to the top data pipeline tools of 2025—built to handle volume, complexity, and speed with ease.

Overusing tools creates fragmented pipelines, complex troubleshooting, and high operational overhead. Teams often add tools to solve narrow problems without a broader system view.

Choose platforms that support multiple use cases, such as Airbyte or Talend, to reduce overhead. Document all pipeline flows and maintain team-wide standards for configuration, error handling, and logging. Review tool usage quarterly to identify what can be consolidated or retired.

When done well, data integration delivers speed, stability, and measurable business value. This section captures the practical benefits and long-term returns of a modern, well-run integration system.

Clean, integrated pipelines give analysts and data teams real-time access to accurate information. Dashboards update faster, and models train on consistent data, improving decision quality and delivery time.

Before: teams pulled Excel files from multiple systems and merged them manually. After: a cloud data warehouse auto-ingests from live APIs and SaaS tools. This shift cuts reporting time and boosts analyst productivity.

Manual ingestion processes often lead to duplication, missing values, and version mismatches. Automation replaces repetitive work, standardizes pipelines, and builds trust in reporting.

Teams in marketing and finance benefit from this directly—reducing dependency on IT teams for reports and enabling self-service analytics. Built-in validation layers also catch errors before they enter core systems.

Modern integration platforms scale horizontally, handling more data without proportional increases in cost or complexity. As your volume grows, unit cost per record drops, and infrastructure remains manageable.

Total cost of ownership falls due to less custom scripting, fewer manual fixes, and more efficient compute. You also save developer time by reusing components and reducing maintenance cycles.

Building solid pipelines starts with the right foundation. Explore the core principles, stages, and best practices every data engineer should know to design reliable integration workflows.

You should reassess your integration strategy when data volume spikes, latency issues emerge, or your tech stack evolves. These trigger points often surface as increased error rates or delayed dashboards.

Use a checklist: Is your schema still compatible? Are SLAs being met? Is a majority of the logic now happening outside the pipeline? Run internal audits every 6–12 months to stay ahead of failures and bottlenecks.

Build pipelines as modular blocks that can be reused, extended, or replaced. Avoid tightly coupled workflows that make changes hard to implement. Design with new APIs, formats, and streaming workloads in mind.

Maintain clear documentation, use parameterized configurations, and isolate transformation logic from source-specific code. This structure lets you scale faster and integrate new data without breaking what's already working.

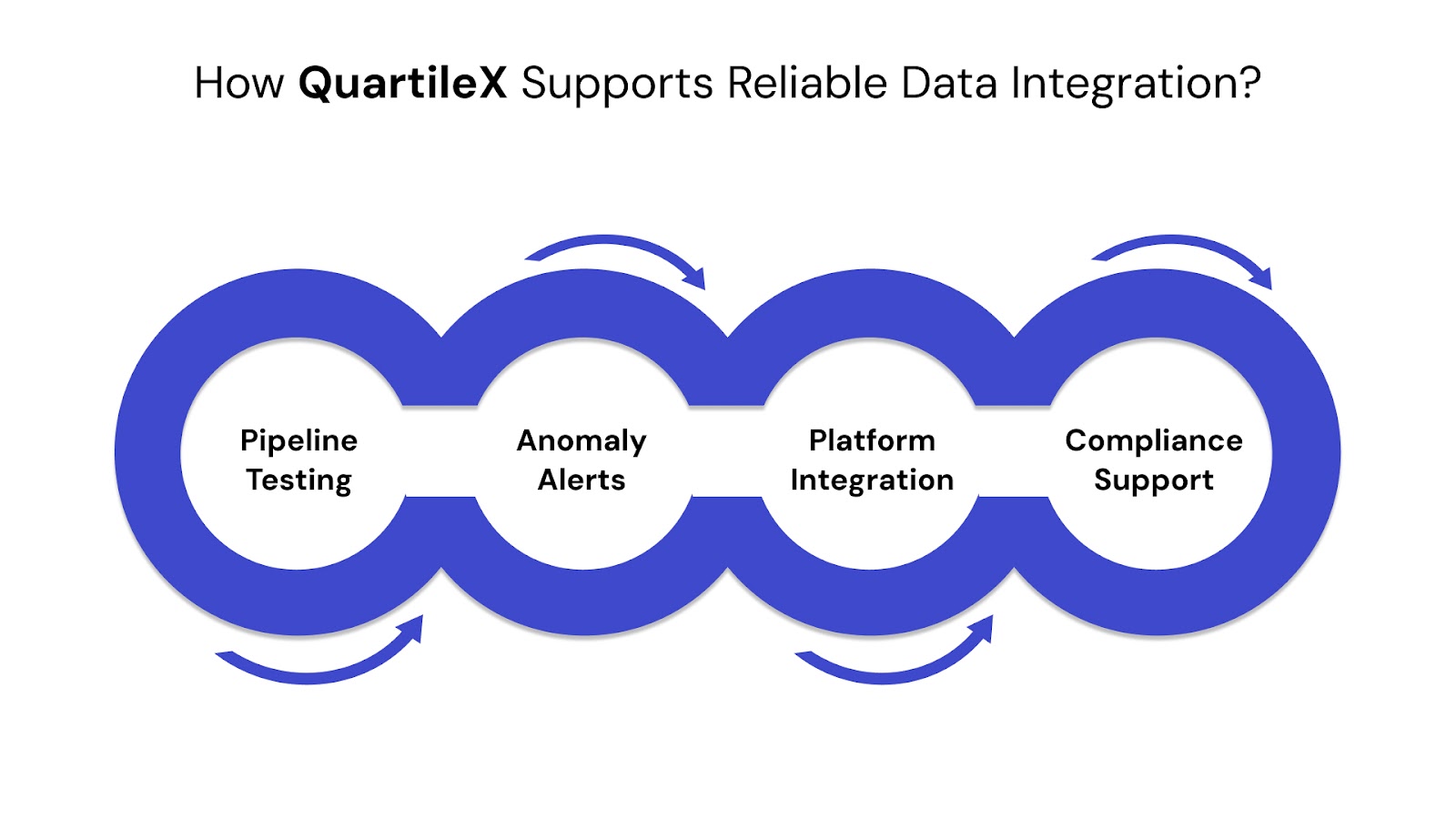

Modern data integration doesn’t end with simply connecting systems—it requires continuous assurance that the data flowing through those systems is accurate, consistent, and trusted. QuartileX complements your existing integration stack by adding a layer of intelligent testing, validation, and monitoring across your data pipelines.

Key capabilities include:

QuartileX ensures your integrated data is not just connected, but trusted, accurate, and ready for high-impact use across analytics, AI, and business intelligence workflows.

As data volumes grow and architectures become more complex, modern data integration has become a cornerstone of digital success. By combining cloud-native platforms, automation, and real-time processing, today’s integration solutions empower organizations to unlock fast, accurate insights across the business.

Yet, even the most advanced tools require validation and ongoing monitoring to ensure data reliability. This is where intelligent testing and governance capabilities make a measurable difference.

Whether you're streamlining existing pipelines or building a scalable data foundation from the ground up, investing in robust integration practices will drive better decisions, higher efficiency, and future-ready operations.

Looking to improve the reliability and performance of your data integration efforts?

Talk to the experts at QuartileX to explore how our intelligent testing solutions can help you validate, monitor, and scale your pipelines—confidently and efficiently.

A: Modern data integration connects structured, unstructured, and streaming data in real time using scalable, cloud-native tools. Unlike traditional batch processing, it enables continuous data flow across diverse sources. This reduces latency, manual effort, and integration failures.

A: Popular tools include Fivetran, Airbyte, Apache Kafka, Talend, and Apache Airflow. Each supports specific use cases—such as real-time ingestion, orchestration, or connector management. Tool choice depends on latency needs, volume, and system architecture.

A: Common challenges include schema drift, latency in real-time pipelines, and overuse of disconnected tools. These lead to data quality issues and operational complexity. Addressing them requires automation, standardization, and platform consolidation.

A: Schema changes—like a new field in an API—can break pipelines or corrupt data silently. Schema registries, versioning, and validation checkpoints prevent these failures. They help maintain consistency and trust across systems.

A: It reduces manual effort, speeds up reporting, and supports self-service analytics. Integration platforms also scale with data volume, keeping infrastructure and developer costs under control. The result is faster insights and more efficient operations.

From cloud to AI — we’ll help build the right roadmap.

Kickstart your journey with intelligent data, AI-driven strategies!