.png)

According to IDC, global data volume is expected to reach 175 zettabytes in 2025. For companies aiming to scale, this surge comes from sales platforms, mobile apps, IoT sensors, marketing tools, and internal systems. The challenge today is not just collecting data. It is about storing it efficiently and making it usable across teams for faster, smarter decisions.

The data lake vs data warehouse question becomes important in this context. Both are designed for large-scale data storage, yet they serve different needs. A data lake stores raw and unstructured data without applying any predefined format. A data warehouse stores cleaned, structured data that is ready for reporting and decision-making.

This guide explores how each one works, where they fit in your data architecture, and how to decide whether you need one or both.

TL;DR — Key Takeaways

If you're trying to decide which approach fits your team’s data needs (or your long-term strategy), understanding the difference between the data lake vs data warehouse is a critical first step.

A data lake is a centralized storage system that holds raw data in its original form. It can store structured data from databases, semi-structured data like JSON files, and unstructured formats such as videos, PDFs, logs, and images. There is no need to define a schema when storing the data.

The adoption of data lakes continues to grow. The global data lake market is projected to reach $29.9 billion by 2027, driven by the demand for flexible, scalable storage that supports modern analytics, AI, and IoT use cases.

Unlike traditional storage systems that require predefined formats, data lakes let you collect everything first and decide how to use it later. This makes them useful for teams that need to explore, experiment, and scale quickly.

To understand how this works in real-world use, let’s look at how data lakes operate.

A data lake follows a simple principle: store now, structure later. It acts as a landing zone for all types of raw data coming from various sources. No formatting or schema is required at the time of storage.

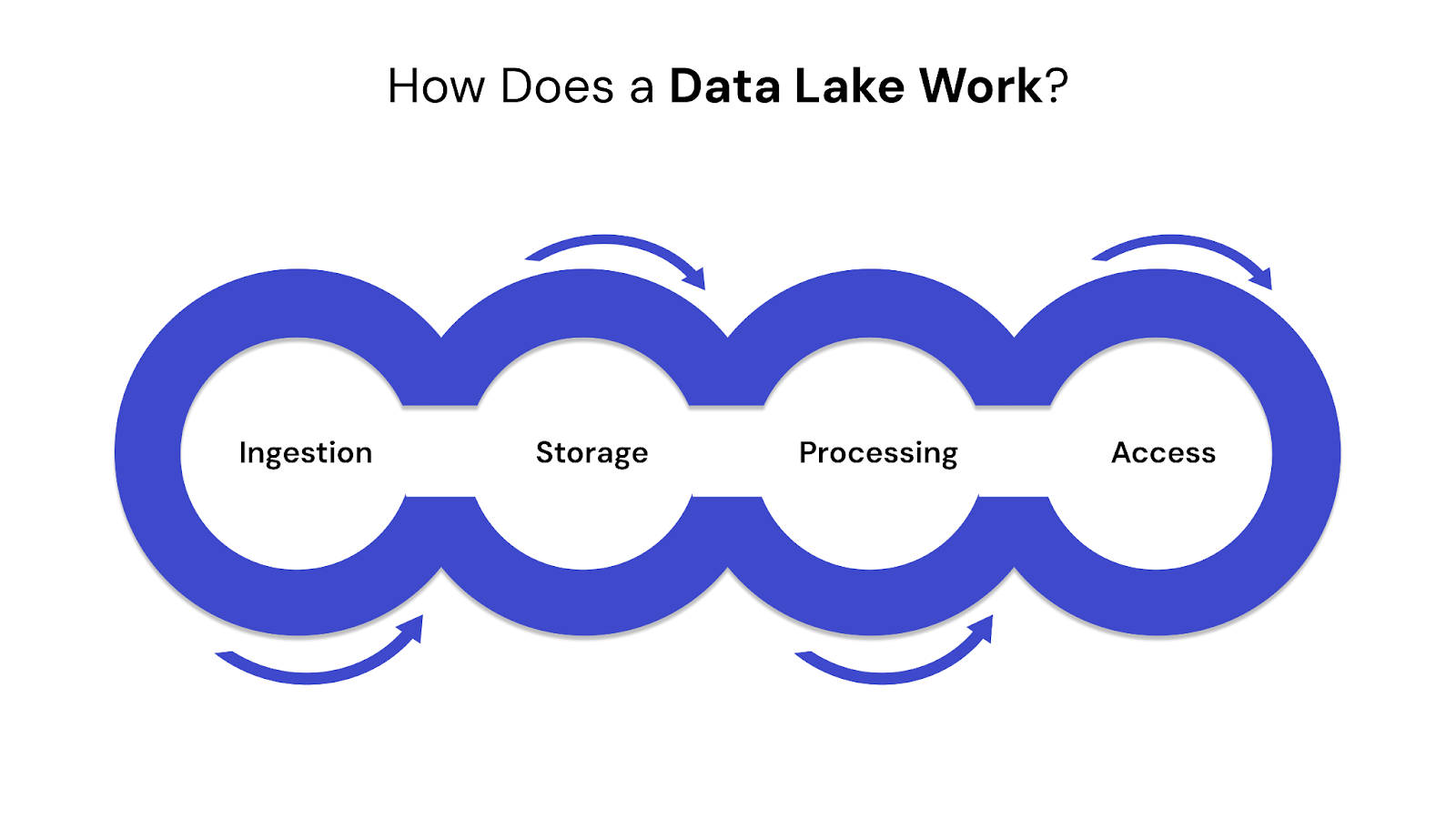

Here’s how the process works:

This approach makes it easier to scale, experiment, and support multiple data types without heavy upfront design.

Data lakes offer flexibility and scale but also require careful management. Here are the core strengths and challenges:

Pros

Cons

Data lakes depend on cloud-native and open-source tools that support flexible data management. Commonly used technologies include:

These tools help organize raw data, manage pipelines, enforce access controls, and maintain data quality across teams.

A data lake gives your organization the ability to store everything in one place without worrying about how it will be used upfront. This supports a range of use cases, from research and modeling to long-term archiving, and also helps in data preparation for storage.

For example, a ride-sharing platform might:

This setup supports innovation without slowing down business teams, making it easier to adapt to future data needs.

Example Scenario:

A ride-hailing app stores trip logs, location pings, and driver feedback in a lake. Data scientists use this data to improve ETA predictions and match rates, without needing IT to pre-process the data.

Next, let’s shift to the structured side of the data spectrum: the data warehouse.

A data warehouse is a centralized system designed to store structured data for fast querying, reporting, and analysis. Unlike a data lake, it requires data to be cleaned, validated, and organized before being stored.

The global data warehousing market is projected to reach $51 billion by 2028, reflecting its critical role in business intelligence, executive reporting, and strategic planning.

Data warehouses are optimized for accuracy and performance. They follow strict data modeling rules to ensure the information is consistent and ready for immediate use by analysts and decision-makers.

Let’s explore how data warehouses operate in practice.

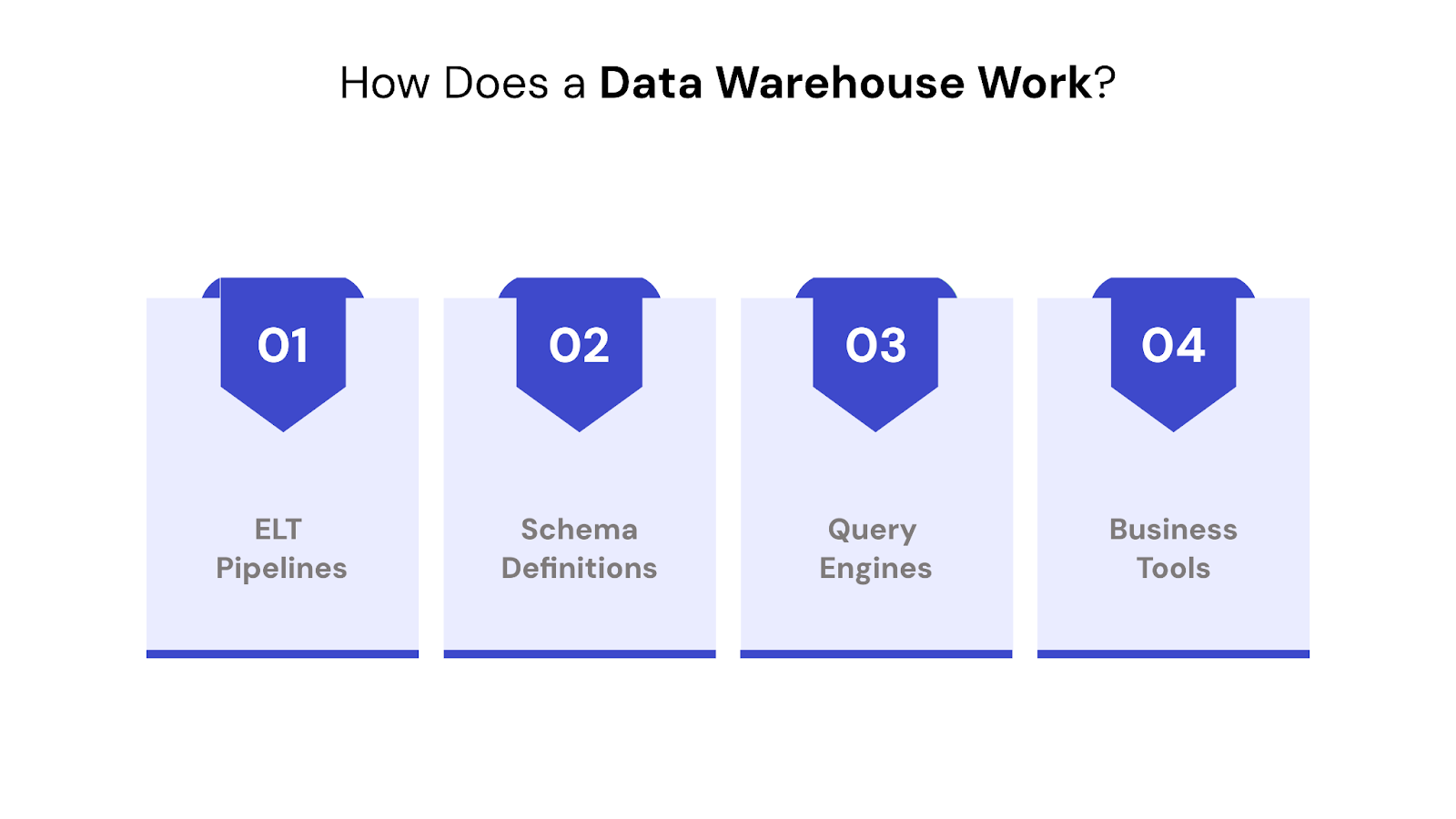

A data warehouse uses a schema-on-write model. Data is transformed during the loading process, which means formatting and validation happen before the data is stored.

Here’s how the process works:

For example, a retail company might store daily sales, inventory levels, and customer transactions in the warehouse. Business teams use tools like Power BI or Tableau to track performance by region or product category.

This predefined structure ensures data quality and makes it easy to compare results over time.

While data warehouses offer strong performance and reliability, they are best suited for specific types of data and use cases.

Pros

Cons

Modern data warehouses depend on scalable infrastructure and well-integrated tools. Common technologies include:

These platforms help ensure that pipelines are efficient, data is accurate, and users can access trusted information when needed.

For teams that need consistent and reliable data to support business decisions, a data warehouse is the foundation for structured reporting.

It enables:

Example Scenario:

A finance team loads monthly revenue, expenses, and forecasts into a warehouse. Dashboards update in real-time, helping leadership make budget adjustments quickly.

To better understand how to build a reliable data foundation using lakes and warehouses, explore our guide on Steps and Essentials to Prepare Data for AI.

Now that you’ve seen how each works individually, let’s compare both data lake vs data warehouse side by side.

Not all data storage needs follow the same path. Some teams need to explore raw, diverse datasets, while others need fast, reliable reports. Understanding how data lakes and data warehouses differ helps you build the right foundation for analytics, machine learning, or business reporting.

Below is a side-by-side comparison to help you evaluate which solution aligns better with your data strategy:

Looking to improve how you bring data into your system? Explore the guide on Data Ingestion Framework: Key Components and Process Flows to build a solid foundation for your data pipeline.

Understanding the differences is just one part. Now, let’s look at how they actually work together in real-world data systems.

Modern data systems rarely rely on just one type of storage. Instead, businesses often combine both data lakes and data warehouses to get the best of flexibility and performance. Understanding how they work together can help you build a stronger, more scalable data strategy.

Here’s how they typically collaborate within a unified pipeline:

1. Raw Data Lands in the Data Lake

The process begins by collecting all types of raw data into the data lake. This includes structured data from systems, unstructured files like images or PDFs, and semi-structured logs from web and app sources.

2. Data Is Processed Within the Lake

Cleaning, deduplication, and transformation are done inside the lake using processing engines like Apache Spark or AWS Glue. This prepares the data for downstream use without immediately moving it.

3. Curated Data Moves to the Data Warehouse

Once the data is structured and refined, selected parts are loaded into the data warehouse. These are the datasets needed for fast queries, dashboards, and business reports.

4. Each Serves Different Users

The data lake supports engineers and data scientists working on models, predictions, or experiments. The warehouse serves analysts and business leaders who need consistent and fast access to performance data.

5. Workflows Are Automated

Tools like Apache Airflow, Azure Data Factory, or dbt are used to schedule and monitor the flow between systems. This keeps data fresh and reduces manual effort.

Want to connect your lake and warehouse effectively? Explore how to build a complete data pipeline for efficient analytics and reporting.

Your decision should reflect the type of data you manage, the skills of your team, and how your business uses that data. Consider these scenarios to see which solution meets your current and future needs.

Choosing between a data lake and a data warehouse depends on the type of data you handle, your team’s skills, and how your organization uses data to make decisions. Both are important for a strong data foundation, but they serve different purposes and suit different workflows.

Here’s how to know which one fits your needs best:

Example:

A smart home company collects raw data from thousands of devices every second. This unstructured data goes into a data lake where engineers later use it to build energy-saving models.

Example:

A retail chain processes daily sales data, cleans it, and loads it into a warehouse. Store managers and executives use dashboards to track performance by location in real time.

You can learn more about secure transitions and compliance in our Cloud Data Security Guide.

Yes, and many companies successfully combine both systems. Airbnb is a strong example. It uses a data lake to store raw data from user interactions, search logs, bookings, and app activity. This gives data scientists the freedom to explore trends, build predictive models, and test new features.

At the same time, Airbnb maintains a data warehouse that holds cleaned and structured data. Business teams rely on it for consistent reporting, tracking KPIs such as revenue, occupancy rates, and guest satisfaction.

By using both, Airbnb balances flexibility and performance. The data lake supports large-scale exploration, while the warehouse ensures fast, trusted insights for decision-making, this is how data drives business growth.

To close, let’s recap why this decision matters.

Knowing the difference between a data lake and a data warehouse is a key step in building a scalable, efficient data strategy. These two systems serve different functions but work best when used together.

A data lake offers the flexibility to store raw, diverse data from multiple sources, making it ideal for advanced analytics, experimentation, and machine learning. A data warehouse provides structure and speed for clean, reliable reporting and decision-making.

When used together, they help organizations:

This balanced approach is essential for businesses navigating complex data challenges and preparing for future growth.

Build with Confidence with QuartileX

At QuartileX, we help you turn raw, fragmented data into a trusted, analytics-ready foundation. Our team brings deep expertise in building modern data architectures that fit your business goals.

Here’s how we support your data journey:

Whether you are starting fresh or scaling an existing setup, QuartileX helps you move with clarity and confidence.

Explore our Data Engineering Services and Partner with QuartileX to start building a future-ready data ecosystem.

A. Not entirely. A data lake offers flexible storage for raw and varied data, but it lacks the structure and speed needed for business reporting. A data warehouse delivers faster queries, consistent metrics, and cleaner data. Most organizations use both, depending on the purpose.

A. A data lakehouse is a hybrid system that combines the low-cost, large-scale storage of a data lake with the structured performance of a warehouse. It allows teams to run analytics and reporting directly on raw or semi-structured data, reducing the need for multiple tools.

A. It depends on your goals and complexity. A basic data lake can be deployed in a few weeks, especially with cloud storage. A data warehouse may take several months due to data modeling, pipeline development, testing, and governance setup.

A. Data warehouses are ideal for finance, healthcare, and other industries that rely on clean, structured reporting. Data lakes are better suited for companies in tech, IoT, media, and research that work with unstructured data or build machine learning models.

A. Data lakes require more attention to governance, metadata cataloging, and quality control to prevent disorganization. Data warehouses are easier to govern once set up but still need regular tuning, schema updates, and usage monitoring to stay efficient.

From cloud to AI — we’ll help build the right roadmap.

Kickstart your journey with intelligent data, AI-driven strategies!