Most businesses today rely on a growing network of databases to power applications, analytics, and day-to-day operations. As systems evolve and data volumes scale, older database setups often become inefficient or difficult to maintain. Migrating to a newer, more flexible environment—whether cloud-native or hybrid—is often not just beneficial, but necessary.

Database migration is the process of moving data, schemas, and configurations from one system to another. Done right, it doesn’t just move information—it improves performance, reduces technical debt, and opens the door for more modern infrastructure and analytics.

In this guide, we explain what database migration means, explore real-world examples, compare common migration strategies, outline how to plan and execute a smooth transition, and share practical best practices for reducing risks and ensuring long-term success.

Database migration involves transferring data from one system (the source) to another (the destination), which could be on a different platform, architecture, or even location. This process includes not only the data itself, but also schema definitions, indexes, stored procedures, user permissions, and other supporting components.

It’s a technical and often business-critical operation—much more than simply exporting and importing files. A successful migration must ensure that the data remains consistent, secure, and fully functional in its new environment.

For example, a healthcare provider running a legacy on-premises PostgreSQL database might migrate to a managed cloud service like Amazon RDS to improve reliability, reduce infrastructure overhead, and support modern analytics. The migration could involve re-architecting the schema, handling patient data under strict compliance rules, and minimizing downtime for mission-critical applications.

Looking for a broader view of enterprise-scale data moves? You might find value in our detailed Data Migration Resources, Tools and Strategy: Ultimate Guide 2025.

Many organizations rely on legacy systems that were built years ago. These systems can become expensive to maintain, hard to scale, and incompatible with modern tools. Database migration helps address these limitations by moving to platforms that offer better performance, security, and flexibility.

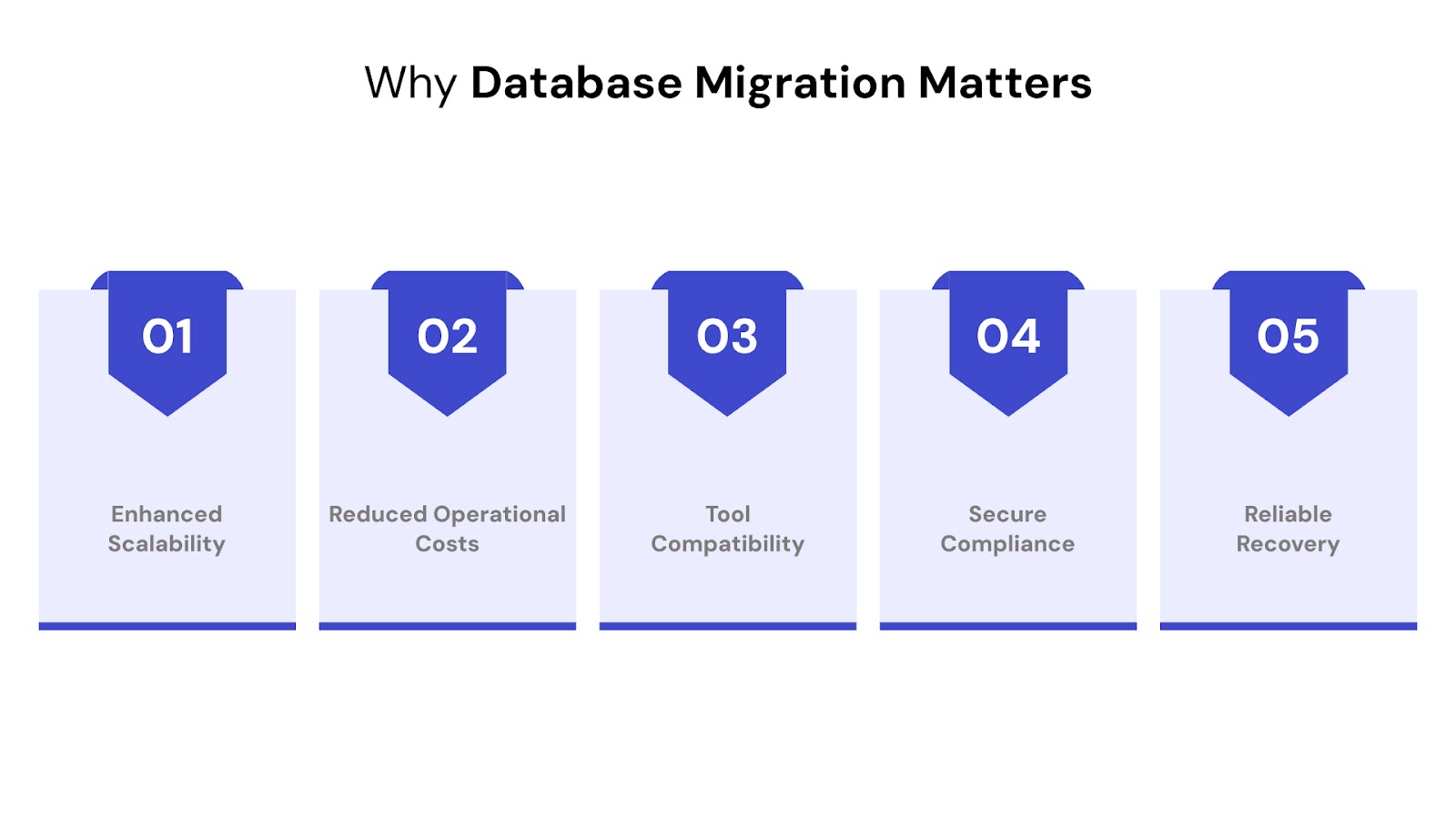

Here are a few reasons companies choose to migrate their databases:

Older databases may not handle large volumes of traffic or complex queries efficiently. Moving to newer systems — especially cloud-based platforms — allows for faster processing and on-demand scalability.

Legacy systems often come with high licensing and infrastructure costs. Cloud and open-source alternatives offer more cost-effective pricing models, helping reduce overall IT spending.

Modern platforms integrate more easily with data pipelines, analytics platforms, and machine learning tools. This makes it easier to extract value from your data and support evolving business needs.

Newer database systems include built-in tools for encryption, access controls, and compliance reporting, which are important for protecting sensitive information and meeting industry regulations.

Many cloud providers offer features like automated backups, regional replication, and failover options. These reduce downtime and support business continuity in case of a failure.

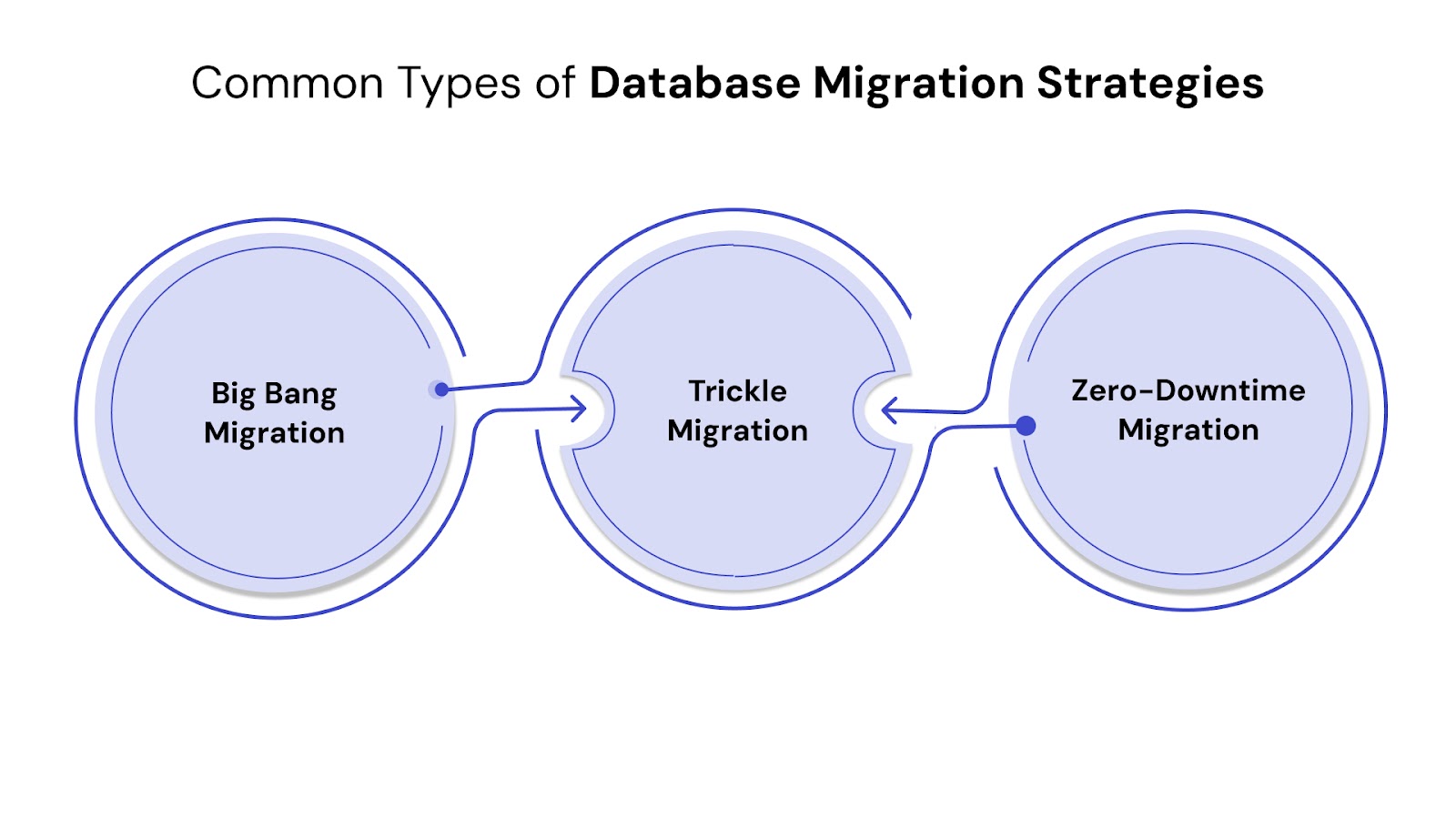

Not all database migrations follow the same path. The strategy you choose depends on your existing infrastructure, business requirements, and tolerance for downtime. Below are the three primary approaches:

In a Big Bang migration, all data is moved from the source to the target system in one go, typically during a scheduled downtime window.

Pros:

Cons:

Use Case: Suitable for small to mid-sized datasets or systems with low uptime requirements.

A Trickle migration breaks the work into smaller steps, transferring data gradually while keeping both the source and target systems running in parallel.

Pros:

Cons:

Use Case: Best for large, mission-critical systems where uninterrupted access is necessary.
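To make the phased approach concrete, here is a minimal sketch of copying one table in small batches, using Python's built-in `sqlite3` module as a stand-in for real source and target databases. The `customers` table, the `copy_in_batches` helper, and the batch size are all hypothetical, and the sketch assumes positive, increasing integer ids. A production migration would also need to handle rows that change after they were copied.

```python
import sqlite3

def copy_in_batches(source, target, batch_size=2):
    """Copy customers from source to target in id order, one batch at a time."""
    last_id = 0  # assumes ids are positive integers
    copied = 0
    while True:
        # Keyset pagination: fetch the next batch after the last copied id.
        rows = source.execute(
            "SELECT id, name FROM customers WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            break
        # INSERT OR REPLACE makes a re-run of the same batch harmless.
        target.executemany(
            "INSERT OR REPLACE INTO customers (id, name) VALUES (?, ?)", rows
        )
        target.commit()  # each batch is committed independently
        last_id = rows[-1][0]
        copied += len(rows)
    return copied

# Hypothetical in-memory source and target databases.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for db in (source, target):
    db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
source.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [(1, "Ada"), (2, "Grace"), (3, "Linus"), (4, "Edsger"), (5, "Barbara")],
)
source.commit()

total = copy_in_batches(source, target)
```

Because each batch commits on its own, an interrupted run can resume from the last copied id instead of starting over.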

In a Zero-Downtime migration, replication tools sync changes from the source database to the target in real time. Cutover happens only after both systems are fully aligned.

Pros:

Cons:

Use Case: Critical applications where even brief downtime is unacceptable.
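The idea of syncing changes until both systems are aligned can be sketched as replaying an ordered stream of change events onto the target. This is a simplified illustration, not how any particular replication tool works: the event format (`op`, `key`, `row`) and the `apply_change` and `replay` helpers are assumptions, and plain dictionaries stand in for tables.

```python
def apply_change(target, event):
    """Apply a single change event to the target table (a dict keyed by primary key)."""
    op, key = event["op"], event["key"]
    if op in ("insert", "update"):
        target[key] = event["row"]
    elif op == "delete":
        target.pop(key, None)  # deleting a missing row is a no-op
    else:
        raise ValueError(f"unknown operation: {op}")

def replay(target, events, from_position=0):
    """Replay events in order and return the next position to resume from."""
    position = from_position
    for event in events[from_position:]:
        apply_change(target, event)
        position += 1
    return position

# The target starts from an initial snapshot of the source.
target_table = {1: {"name": "Ada"}, 2: {"name": "Grace"}}

# Changes that happened on the source after the snapshot was taken.
change_log = [
    {"op": "update", "key": 1, "row": {"name": "Ada L."}},
    {"op": "insert", "key": 3, "row": {"name": "Linus"}},
    {"op": "delete", "key": 2},
]

next_position = replay(target_table, change_log)
```

Tracking the resume position is what lets the target catch up repeatedly until the remaining lag is small enough to cut over.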

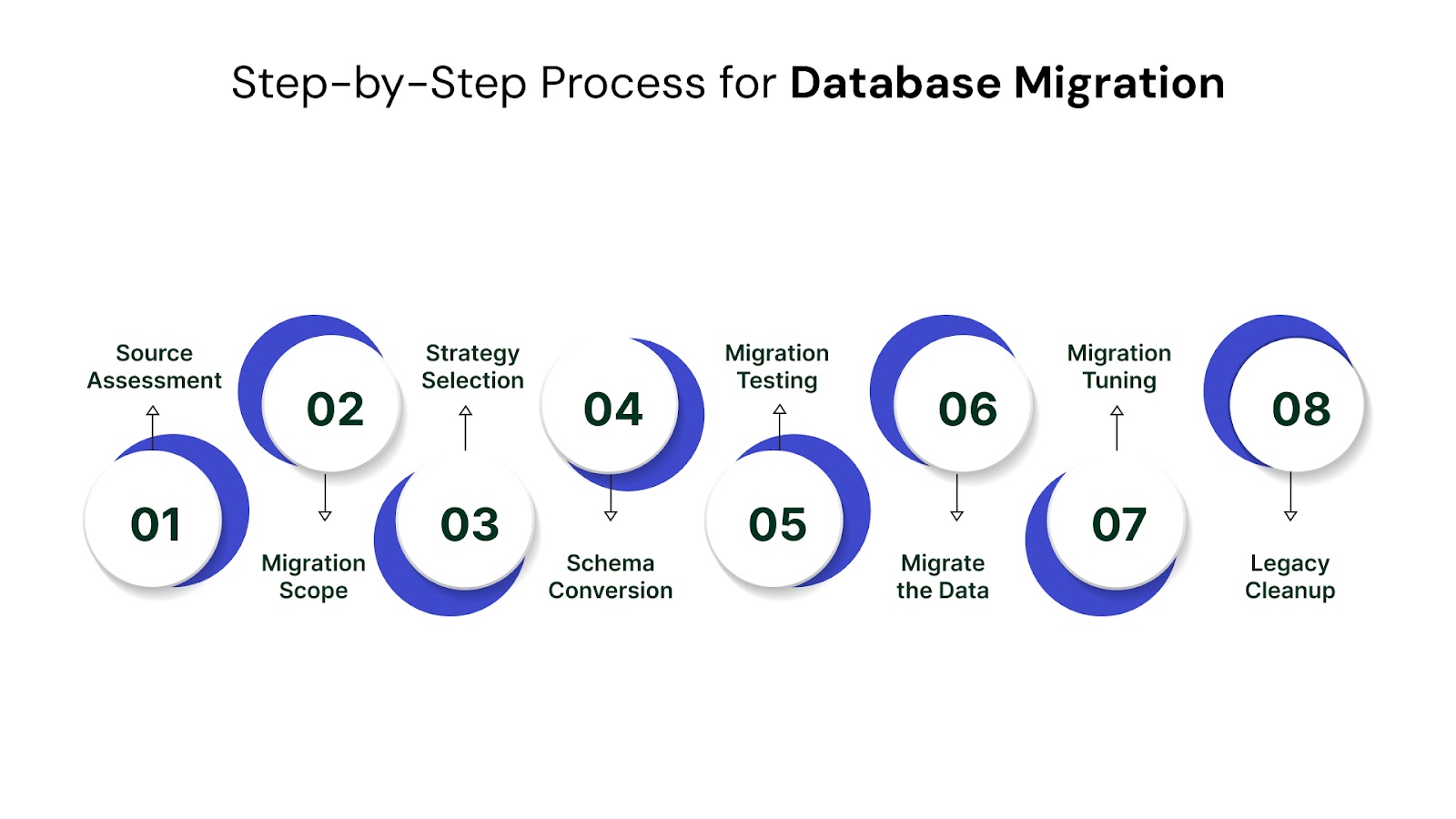

A successful database migration isn't about moving data as quickly as possible — it's about moving it correctly, securely, and in a way that supports your long-term business goals. Below is a practical framework many organizations follow:

Start by understanding the architecture, schema complexity, size of your dataset, and existing performance bottlenecks. Identify dependencies between applications and databases. This early discovery phase helps you plan for what can (or can’t) be migrated and sets the baseline for effort and cost.

Outline what needs to be migrated: schema, stored procedures, functions, triggers, indexes, and actual data. Decide on target database technologies (e.g., SQL Server to PostgreSQL or Oracle to MySQL) and whether you're doing a homogeneous or heterogeneous migration.
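As a rough illustration of scoping, the sketch below counts the schema objects in a database so nothing is missed when the migration scope is written down. It uses SQLite's `sqlite_master` catalog because it runs anywhere. Other engines expose similar metadata through their own catalogs, and the `inventory` helper and `orders` schema are hypothetical.

```python
import sqlite3
from collections import Counter

def inventory(conn):
    """Count user-defined schema objects by type (table, index, trigger, view)."""
    rows = conn.execute(
        "SELECT type, name FROM sqlite_master WHERE name NOT LIKE 'sqlite_%'"
    ).fetchall()
    return Counter(object_type for object_type, _ in rows)

# Hypothetical schema with one table, one index, and one trigger.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL, note TEXT)")
conn.execute("CREATE INDEX idx_orders_total ON orders (total)")
conn.execute(
    "CREATE TRIGGER trg_orders_note AFTER INSERT ON orders "
    "BEGIN UPDATE orders SET note = 'new' WHERE id = NEW.id; END"
)

counts = inventory(conn)
```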

Based on the complexity and business needs, pick from Big Bang, Trickle, or Zero-Downtime (as discussed above). Align your choice with the acceptable level of downtime, business continuity expectations, and testing capabilities.

If you're switching database engines (heterogeneous migration), schema conversion will be essential. Tools such as the AWS Schema Conversion Tool or Oracle SQL Developer help automate this, but manual validation is still necessary for compatibility and performance.
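To show what schema conversion involves at its simplest, here is a sketch that maps a handful of SQL Server column types to commonly used PostgreSQL equivalents. The mapping table is illustrative and far from complete, the `convert_type` helper is hypothetical, and anything it does not recognize is returned as `None` so that a person reviews it. This mirrors the need for manual validation rather than replacing a conversion tool.

```python
import re

# Illustrative subset of SQL Server -> PostgreSQL type mappings (an assumption,
# not an exhaustive or authoritative list).
TYPE_MAP = {
    "BIT": "BOOLEAN",
    "DATETIME": "TIMESTAMP",
    "NVARCHAR": "VARCHAR",
    "TINYINT": "SMALLINT",
    "UNIQUEIDENTIFIER": "UUID",
}

def convert_type(source_type):
    """Return the mapped target type, or None if the type needs manual review."""
    match = re.fullmatch(r"\s*(\w+)\s*(\(.*\))?\s*", source_type)
    if not match:
        return None
    base = match.group(1).upper()
    args = match.group(2) or ""
    if base not in TYPE_MAP:
        return None  # unknown type: flag for manual review
    if args.strip("() ").upper() == "MAX":
        return None  # (MAX) has no direct length equivalent: flag for review
    return TYPE_MAP[base] + args

converted = {t: convert_type(t) for t in ["nvarchar(50)", "BIT", "GEOGRAPHY", "NVARCHAR(MAX)"]}
```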

Before touching production, run test migrations in a sandbox. Validate schema structure, data integrity, and application compatibility. Focus on performance benchmarking, indexing behavior, and query optimization in the new environment.

Perform the actual data transfer using ETL tools, database-specific migration utilities, or change data capture (CDC) methods. Ensure audit logs are maintained, especially for compliance-sensitive environments.

After data transfer, validate accuracy using checksums or reconciliation scripts. Review indexing strategies, query plans, and application performance. Monitor logs for anomalies and confirm business processes are functioning as expected.
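A checksum-based reconciliation can be sketched as follows: compute a row count and a hash over each table's rows in a stable order on both sides, then compare the results. The `table_fingerprint` helper and the `patients` table are hypothetical, and `sqlite3` again stands in for the real databases. When source and target are different engines, values may need normalizing (for example dates or decimals) before hashing, which this sketch does not attempt.

```python
import hashlib
import sqlite3

def table_fingerprint(conn, table, key_column):
    """Return (row_count, sha256_hex) for a table, reading rows in key order."""
    digest = hashlib.sha256()
    row_count = 0
    # table and key_column are interpolated directly, so they must come from
    # a trusted list of names, never from user input.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key_column}"):
        digest.update(repr(row).encode("utf-8"))
        row_count += 1
    return row_count, digest.hexdigest()

def make_db(rows):
    """Build a hypothetical in-memory database with a small patients table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE patients (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO patients VALUES (?, ?)", rows)
    conn.commit()
    return conn

source = make_db([(1, "Ada"), (2, "Grace")])
good_target = make_db([(2, "Grace"), (1, "Ada")])  # same rows, different load order
bad_target = make_db([(1, "Ada"), (2, "Grac")])    # one truncated value

source_fp = table_fingerprint(source, "patients", "id")
matches = source_fp == table_fingerprint(good_target, "patients", "id")
mismatch = source_fp != table_fingerprint(bad_target, "patients", "id")
```

Ordering by the key makes the fingerprint independent of load order, so only real differences in content cause a mismatch.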

Once confident in the new system’s stability and performance, decommission legacy databases. Ensure backups and rollback plans are archived in accordance with your organization’s data governance policies.

Even with careful planning, database migrations often run into hurdles — especially when dealing with legacy systems, large data volumes, or real-time business operations. Here are the most common challenges you should anticipate:

One of the most significant risks is partial or corrupted data during transfer. This often stems from poorly written scripts, unhandled null values, encoding mismatches, or incorrect type casting between source and target databases.

Tip: Always run end-to-end data validation and back up the source database before beginning the migration.
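The failure modes above (unhandled nulls, encoding mismatches, bad type casts) can be caught before loading with a small per-row check. The sketch below is one possible shape for it. The `validate_row` helper and the schema format, which maps each column to an expected Python type and a nullable flag, are assumptions made for illustration.

```python
def validate_row(row, schema):
    """Return a list of problems for one row; an empty list means it is safe to load."""
    problems = []
    for column, (expected_type, nullable) in schema.items():
        value = row.get(column)
        if value is None:
            if not nullable:
                problems.append(f"{column}: unexpected NULL")
            continue
        if isinstance(value, bytes):
            # Raw bytes must decode cleanly before they can be cast.
            try:
                value = value.decode("utf-8")
            except UnicodeDecodeError:
                problems.append(f"{column}: not valid UTF-8")
                continue
        try:
            expected_type(value)
        except (TypeError, ValueError):
            problems.append(
                f"{column}: cannot cast {value!r} to {expected_type.__name__}"
            )
    return problems

# Hypothetical target schema: column -> (expected type, nullable).
schema = {"id": (int, False), "name": (str, False), "age": (int, True)}

clean = validate_row({"id": "7", "name": "Ada", "age": None}, schema)
broken = validate_row({"id": "x7", "name": None, "age": "41"}, schema)
garbled = validate_row({"id": 1, "name": b"\xff\xfe", "age": 3}, schema)
```

Collecting every problem for a row, rather than stopping at the first, makes the resulting report more useful when fixing the source data.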

Extended downtime during migration affects business continuity. Even a few hours of unplanned outage can result in lost revenue and poor customer experience — especially for real-time platforms. To reduce this risk, many organizations are exploring Zero-Downtime Migration techniques.

Migrated databases may initially experience degraded performance due to missing or unsuitable indexes, stale optimizer statistics, or query structures that the new engine handles differently.

Proper testing and tuning after migration are critical, as discussed in our Guide to Testing Data Pipelines: Tools, Approaches and Essential Steps.

Failing to encrypt sensitive data during transit or not adhering to region-specific data regulations (like GDPR or HIPAA) can result in major compliance issues. Ensure all data movement is secure, logged, and validated.

You can read more about secure transitions and compliance in our Cloud Data Security Guide.

Older systems often have undocumented dependencies or hardcoded values that break when ported. Applications may also rely on specific SQL dialects or stored procedures that behave differently in the target database.

Documenting dependencies early in the planning phase and involving application teams can help avoid surprises later.

To reduce risk and maximize efficiency, successful database migration projects follow a structured approach rooted in tested best practices. Here’s what your team should prioritize:

Start by identifying the type of migration (homogeneous vs. heterogeneous), your data volume, application dependencies, acceptable downtime, and your end goals. Choose your strategy accordingly — whether Big Bang, Trickle, or Zero-Downtime.

For a deeper comparison of cloud migration approaches, check out our post on Choosing the Right Cloud Migration Strategy: Key Considerations.

Run a thorough assessment of your existing data before migrating. Eliminate duplicates, normalize formats, and fix corrupt or outdated records. Clean data is easier to move, validate, and manage post-migration.
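As a small illustration of pre-migration cleanup, the sketch below normalizes formats and drops duplicates in a list of records. The record shape and the choice of a lowercased email as the duplicate key are assumptions. Real cleanup rules depend on the data and should be agreed with the teams that own it.

```python
def clean_records(records):
    """Normalize email and name formats, keeping the first record per email."""
    seen = set()
    cleaned = []
    for record in records:
        email = record["email"].strip().lower()
        if email in seen:
            continue  # duplicate once formats are normalized
        seen.add(email)
        # Collapse repeated whitespace in names.
        name = " ".join(record["name"].split())
        cleaned.append({"email": email, "name": name})
    return cleaned

# Hypothetical raw records with inconsistent formatting.
raw = [
    {"email": " Ada@Example.com ", "name": "Ada   Lovelace"},
    {"email": "ada@example.com", "name": "Ada Lovelace"},
    {"email": "grace@example.com", "name": " Grace Hopper "},
]

cleaned = clean_records(raw)
```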

Automated migration tools — such as AWS DMS, Azure Database Migration Service, or Fivetran — help reduce human error and accelerate the process. Use Change Data Capture (CDC) and schema conversion tools to replicate changes without halting live systems.

We also explore such automation tools and strategies in our Ultimate Guide to Data Engineering Tools in 2025.

Unit tests, integration tests, and performance benchmarks should be part of the migration process — not an afterthought. Always validate migrated data against source systems to confirm accuracy.

Prepare for the worst-case scenario by maintaining backups and ensuring your old system is intact until post-migration validation is complete. Even in a zero-downtime setup, rollback capabilities provide a crucial safety net.
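A rollback safety net can be as simple as a verified snapshot taken before any risky step. The sketch below uses the `Connection.backup` method from Python's `sqlite3` module (available in Python 3.7 and later) to snapshot a database, simulate a bad migration step, and restore. The `accounts` table and the in-memory databases are hypothetical stand-ins, and real systems would rely on their engine's own backup and restore tooling.

```python
import sqlite3

# Hypothetical live database.
live = sqlite3.connect(":memory:")
live.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER)")
live.execute("INSERT INTO accounts VALUES (1, 100)")
live.commit()

# Take a snapshot before the risky step.
snapshot = sqlite3.connect(":memory:")
live.backup(snapshot)

# Simulate a migration step that goes wrong.
live.execute("UPDATE accounts SET balance = 0")
live.commit()
balance_after_failure = live.execute(
    "SELECT balance FROM accounts WHERE id = 1"
).fetchone()[0]

# Roll back by restoring the snapshot over the live database.
snapshot.backup(live)
balance_after_restore = live.execute(
    "SELECT balance FROM accounts WHERE id = 1"
).fetchone()[0]
```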

As the volume, velocity, and variety of data increase, database migration strategies must evolve. Here are some key trends shaping how organizations modernize their data environments:

With businesses embracing hybrid and multi-cloud environments, migrations are now largely oriented toward managed platforms like Amazon RDS, Google Cloud SQL, or Azure SQL Database. These platforms offer automated backups, scalability, and simplified monitoring, which are major incentives for companies looking to phase out legacy on-prem databases.

Tools like Flyway and Liquibase automate versioned schema changes and, where supported, rollbacks, while pipeline services like Fivetran and Hevo automate data syncing. These reduce manual overhead, minimize risk, and accelerate go-live timelines.

Organizations are increasingly looking to automate pipelines to cut delays in deployment and ensure consistent data delivery.

Advanced platforms are now using AI to assess data quality, predict failure risks, and recommend optimization strategies before execution. Machine learning algorithms also help detect anomalies during migration and can auto-correct certain issues in real time.

Business continuity can’t take a backseat during migration. As a result, many enterprises now treat zero-downtime migration not as a luxury, but as a requirement — especially when migrating production workloads. Real-time replication tools and change data capture (CDC) pipelines help minimize disruption during cutover.

With increasing regulatory pressures (GDPR, HIPAA, etc.), migrations today require encrypted transfers, role-based access controls, audit trails, and tokenized data movement. Businesses are embedding compliance into their migration blueprints right from the start.

For more on maintaining secure architecture during cloud migration, see our guide: Cloud Data Security Made Simple.

Database migration is not a one-size-fits-all operation. Whether you’re moving from legacy systems to cloud-native platforms, or consolidating fragmented databases into a unified data environment, the stakes are high — but so are the rewards. The key lies in selecting the right migration strategy, preparing thoroughly, validating continuously, and leveraging modern tools to streamline the process.

At QuartileX, we specialize in helping organizations minimize risk and maximize performance during migrations.

We've helped enterprises across industries transition critical systems without interrupting operations. If you're planning a high-stakes migration, we recommend exploring our companion piece on Minimising Data Loss During Database Migration to learn the tactical steps that ensure a smooth transition.

Need to Migrate Without the Mayhem?

From initial planning to post-migration optimization, our team of data engineers can guide you every step of the way. Whether it's a big bang cutover or a phased trickle migration, QuartileX ensures your data lands where it should — securely, efficiently, and intact.

Get in touch to start building a migration strategy tailored to your goals.

Database migration is the process of moving data from one database system to another. This can involve transferring schemas, tables, data, stored procedures, and more. Migrations are often undertaken to upgrade technology, improve performance, reduce costs, or align with cloud strategies.

The three common approaches are Big Bang, Trickle, and Zero-Downtime migration.

Challenges include data loss, corruption, schema mismatches, extended downtime, compliance risks, and disruption to ongoing operations. Choosing the right strategy and tools can help reduce these risks significantly.

Use encryption, access controls, and audit logs throughout the migration process. Validate every phase with testing and monitoring. Cloud-native security services and compliance checks also play a crucial role.

Popular tools include AWS Database Migration Service (DMS), Azure Database Migration Service, Flyway, Liquibase, and Fivetran. Each serves different scenarios — from homogeneous migrations to complex heterogeneous environments.

QuartileX offers customized migration solutions backed by deep experience in enterprise-scale transitions. We provide end-to-end support, automation-first pipelines, compliance-driven design, and minimal disruption to your business.
