Organizations across industries are increasingly data-centric. From real-time analytics to machine learning applications, data plays a central role in business innovation and decision-making. But behind the dashboards and models that fuel these insights are two crucial disciplines: data engineering and data science.

Although often lumped together, these fields serve distinct purposes. Data engineers build and maintain the data infrastructure—pipelines, storage systems, and integration workflows—while data scientists use that infrastructure to extract insights, forecast trends, and build models.

In this guide, we break down what each role entails and how it contributes to the data lifecycle, the core skill sets and technologies involved, the key differences and areas of overlap, and why collaboration between the two functions is critical for building scalable, reliable analytics capabilities.

TL;DR — Key Takeaways

If you're trying to decide which discipline suits your team’s needs (or your own career path), understanding the split between the two is a crucial first step.

Data engineering refers to the architecture, development, and maintenance of data systems that make information accessible, usable, and trustworthy. While it’s not always visible to end users, data engineering is the invisible scaffolding that supports everything from operational dashboards to AI algorithms.

At its core, it’s about building the infrastructure that collects, processes, stores, and moves data—whether it's batch ingestion from SaaS apps or streaming data from IoT devices.

These responsibilities ensure data is not only accessible but also consistent, timely, and trusted by downstream consumers.

Without a reliable data foundation, even the most advanced data science models will falter. Data engineering ensures that data is clean, reliable, and accessible—so analysts and scientists can focus on extracting value instead of fighting infrastructure issues.

For example, a data engineer might:

This allows teams across product, growth, and operations to make informed decisions using trusted metrics.

Data science is the discipline of using data to extract meaningful insights, predict future outcomes, and guide strategic decisions. While data engineering focuses on building infrastructure, data science applies statistical methods, machine learning algorithms, and analytical techniques to interpret data and answer complex questions.

In practical terms, data scientists turn raw data into actionable intelligence—whether it’s identifying customer churn patterns, forecasting sales, optimizing supply chains, or training recommender systems.

Data scientists must balance deep technical skills with strong business intuition—they’re expected to bridge the gap between numbers and narrative.

Data science enables organizations to go beyond historical reporting and into forecasting and optimization. A well-designed machine learning model can help predict customer churn, optimize logistics routes, or even personalize product recommendations in real time.

For instance, a data scientist might:

Without effective data science, companies risk underutilizing their data assets—relying on gut instinct over data-driven insights.

Though often grouped under the umbrella of "data roles," data science and data engineering serve fundamentally different purposes. While they work in tandem, each requires a unique set of skills, tools, and outcomes.

Let’s break down the distinctions clearly:

Think of data engineers as architects and plumbers who lay the pipelines and infrastructure. Data scientists are the analysts and strategists who consume that data to draw conclusions, build forecasts, or optimize processes.

Without the foundation built by data engineers, data scientists wouldn’t have clean, timely, or accessible data to work with. Conversely, without data scientists, data would be collected and stored — but rarely transformed into business value.

Let’s consider a retail enterprise implementing dynamic pricing:

While data science and data engineering are distinct disciplines, the magic truly happens when they work together. Collaboration ensures that insights are not only generated but also scalable, reproducible, and production-ready.

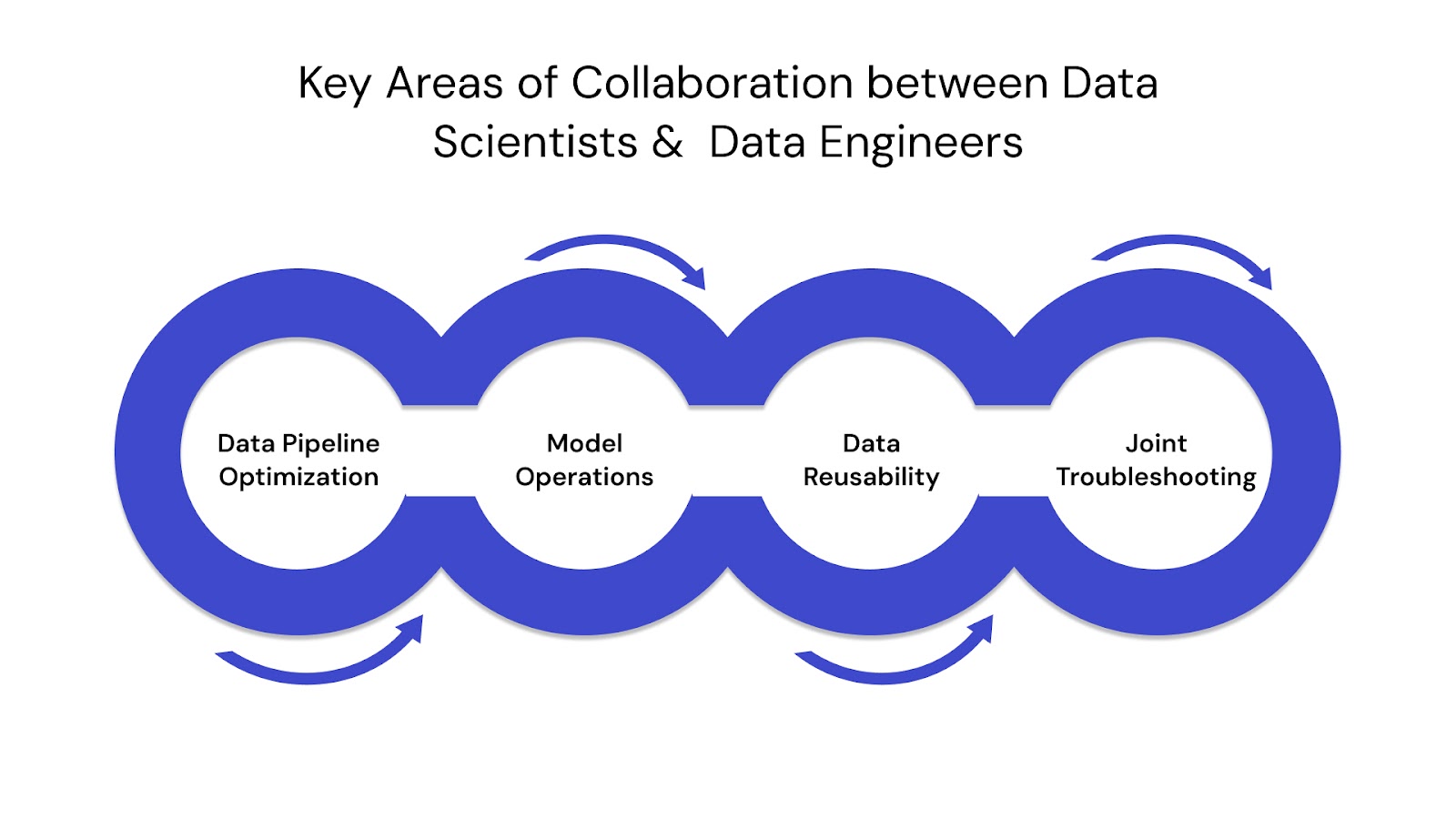

Key Areas of Collaboration:

Data scientists rely heavily on timely, clean data — and it’s the job of data engineers to make that possible. Engineers build and maintain automated pipelines that source, clean, and load data into accessible environments (like data warehouses or lakes).

Want to understand how modern data pipelines are designed? Here’s a guide on building and designing scalable data pipelines.

Once data scientists build a model, engineers help package and deploy it into production environments. This includes wrapping models into APIs, integrating with apps, or setting up real-time scoring systems.

To do this effectively, many organizations adopt MLOps best practices.

Curious about MLOps? Read how automation pipelines power reliable model operations.

Engineers and scientists often collaborate on shared data assets like feature stores, data marts, and standardized model inputs — increasing productivity and model performance across teams.

Whether it’s a failed pipeline or a skewed model result, both roles need to work closely to debug issues — especially in production environments where business impact is high.

Together, they close the loop from raw data to business-ready insights — enabling:

Related read: Steps and Essentials to Prepare Data for AI

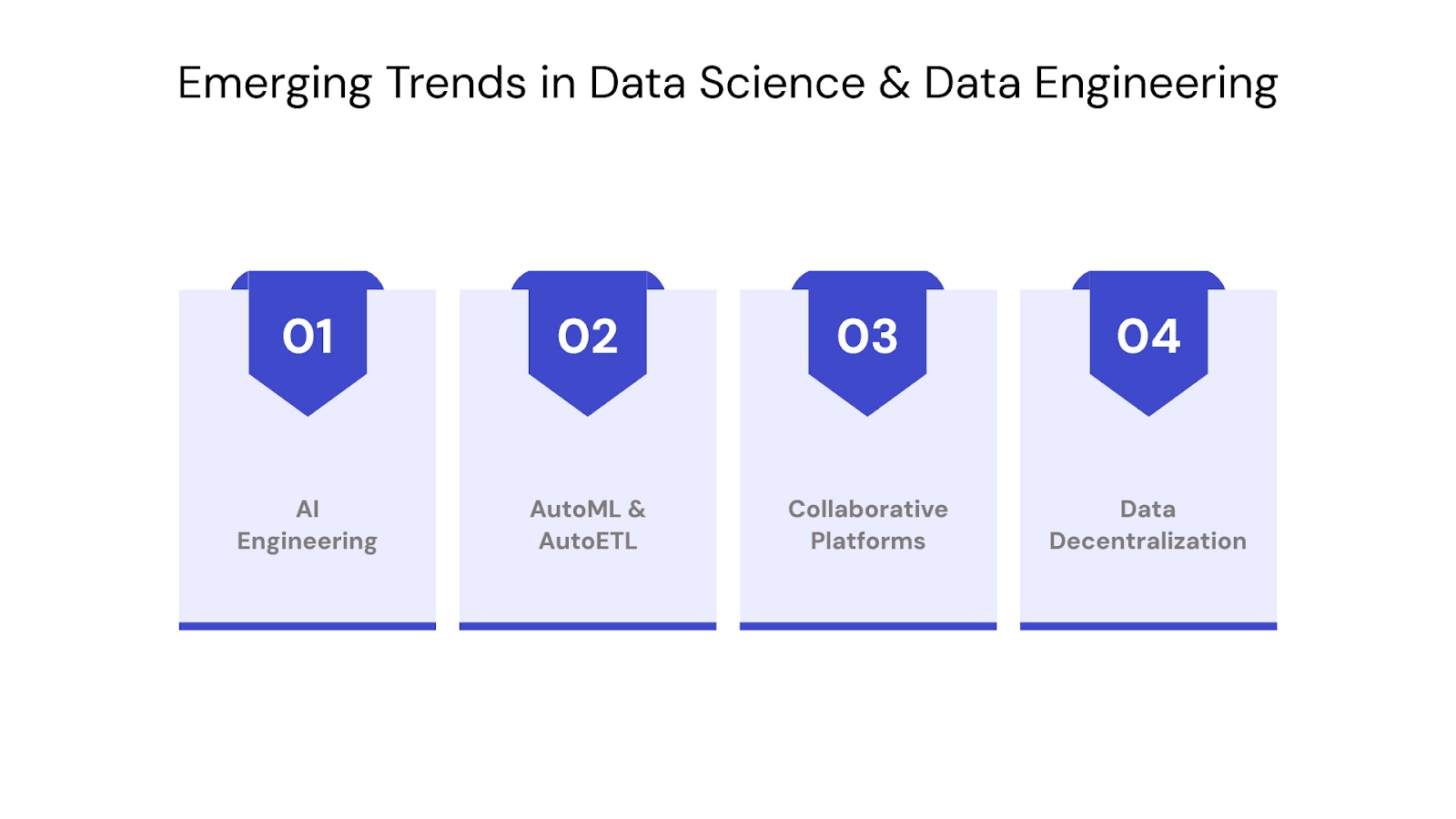

The landscape of data science and engineering is evolving fast, shaped by technological innovation, scalability demands, and the growing role of automation. Let’s look at some trends defining the future of these disciplines.

Modern data engineering is becoming more automated and intelligent. Tools powered by artificial intelligence now assist in:

This shift allows engineers to focus more on strategic architecture and less on repetitive tasks.

AutoML enables data scientists to build, train, and deploy models with minimal manual tuning. Similarly, AutoETL automates ingestion and transformation processes, improving pipeline efficiency and reducing human error.

These tools empower cross-functional teams to experiment faster and bring value to production more quickly.

Cloud-native data platforms (e.g., Snowflake, Databricks) are centralizing engineering and analytics efforts into shared workspaces, enabling seamless collaboration across departments.

These environments promote better governance, shared metadata, and more consistent outputs — crucial for aligning engineering and science teams.

Here’s how to choose the right cloud migration strategy to enable unified data platforms.

More organizations are adopting a data mesh architecture, where domain teams own their data pipelines and models. This decentralizes responsibility while encouraging data products to be treated as shared assets.

Interested in modern data architectures? Explore the differences between Data Mesh and Data Fabric.

Understanding the difference between data science vs data engineering is essential for any business or professional navigating the modern data landscape. These two disciplines are not rivals — they’re complementary forces. Data engineering lays the foundation by building the pipelines, architecture, and governance frameworks. Data science takes that foundation and unlocks its potential through modeling, pattern recognition, and insights that drive strategic action.

Organizations that successfully integrate these roles see measurable improvements in:

As businesses face increasingly complex data challenges, this synergy between engineering and science becomes indispensable.

Whether you're building an analytics platform from scratch or scaling an existing infrastructure, ensuring collaboration between data scientists and data engineers should be a top priority.

Build with Confidence — Partner with QuartileX

At QuartileX, we help you transform raw, siloed data into a powerful, analytics-ready engine. With deep expertise in both data science and data engineering, our teams can:

From foundational infrastructure to intelligent modeling, we partner with you at every step.

Explore our Data Engineering Services or book a consultation with our experts to get started.

Data engineering focuses on building and maintaining the infrastructure and pipelines that collect, store, and prepare data. Data science, on the other hand, analyzes that data to extract insights using statistical models, machine learning, and visualizations. Engineers enable access to high-quality data; scientists turn that data into actionable knowledge.

While data scientists don’t need deep engineering expertise, a working understanding of pipelines, data structures, and databases helps them collaborate effectively with engineers and handle data more independently during model development or experimentation.

Both roles are in high demand, but the rise of cloud-native platforms, real-time data, and AI integration has significantly increased the need for skilled data engineers. Many companies prioritize building a reliable data foundation before scaling advanced analytics.

Related read: Exploring the Fundamentals of Data Engineering: Lifecycle and Best Practices

In smaller teams or startups, a single professional may handle both tasks — often called a “full-stack data scientist.” However, in larger or enterprise environments, these roles are usually separated to allow deeper specialization and scalable workflows.

It depends on your strengths and interests. If you enjoy building systems, automating data flows, and solving infrastructure problems, data engineering may be a better fit. If you’re more interested in statistical analysis, experimentation, and drawing insights, consider data science. Both paths offer strong growth, salaries, and career opportunities.

From cloud to AI — we’ll help build the right roadmap.

Kickstart your journey with intelligent data, AI-driven strategies!