.jpg)

MLOps automation refers to automating every stage of the machine learning lifecycle—from data validation and model training to CI/CD and production monitoring. Without it, teams face inconsistent outputs, broken pipelines, and deployment delays. A 2025 survey found nearly 70% of AI practitioners have struggled to reproduce experiments across environments.

As real-time inference, containerized workflows, and cloud-native ML gain traction, automation is no longer optional. This guide covers core components, essential tools, pipeline design practices, and key implementation challenges.

MLOps automation refers to the use of tools and pipelines to manage machine learning workflows reliably and at scale. Instead of relying on manual scripts and fragmented processes, teams adopt automation to reduce errors, speed up deployment, and ensure reproducibility. This section explains the shift from manual to automated ML workflows, the core objectives driving this change, and where the practice is headed next.

Manual vs Automated Machine Learning Pipelines

Manual ML workflows involve disconnected scripts, ad hoc data handling, and hand-tuned deployment steps. These increase risk, slow delivery, and make reproducibility nearly impossible. In contrast, automated workflows use consistent pipelines, version-controlled assets, and scheduled triggers to ensure reliability across environments.

Here’s a comparison of key factors:

Example Scenario – Model Retraining

Want better model performance from the start? Learn how to structure, clean, and prepare your data for AI success. Discover the key steps that set strong foundations.

Key Objectives Driving MLOps Automation

The main goals of MLOps automation are:

These goals directly address business needs like faster time to market, improved model trust, and operational reliability. According to a 2025 survey, 70% of AI practitioners struggle with reproducibility—automation is a key step in solving that (source).

Where MLOps Automation Is Headed

MLOps automation is shifting toward increased standardization, broader tool interoperability, and the use of low-code interfaces for orchestration. Platforms like Vertex AI, Databricks, and Hugging Face AutoTrain now offer automated pipelines with minimal setup.

Generative AI is also influencing automation, enabling dynamic data augmentation, synthetic test generation, and even pipeline configuration based on natural language prompts.

While full automation remains complex, current trends show growing adoption of modular tools, reproducible pipelines, and built-in monitoring—paving the way for scalable ML operations.

Want to understand what powers generative AI agents behind the scenes? Explore the architecture that connects memory, planning, tools, and prompts. Learn how these components work together to create autonomous, task-driven systems.

Automating machine learning workflows requires more than scheduling scripts. It depends on tightly integrated systems for tracking, testing, and responding to model behavior. This section explains the foundational components that enable MLOps automation across development and production stages.

1. Versioning Everything: Code, Data, and Models

Automation depends on consistency. Tracking only code isn’t enough—model outputs depend on training data, parameters, and environment configurations. Without full version control, rollback and experiment comparison become unreliable.

Tools like Git manage code, DVC tracks data and pipelines, and model registries in MLflow or Weights & Biases store versioned models and metrics.

Example: A model trained last month starts underperforming. By checking the model registry, the team compares metrics from both versions. DVC shows the new training data had missing values. The pipeline is rolled back to the last stable version and retraining is triggered using clean data.

2. Automated Testing and Validation at Every Stage

Testing in MLOps covers more than software logic—it includes the integrity of data and model behavior under different conditions. Automated tests should be integrated across the pipeline to catch issues early.

Key test types include:

Scenario: A credit scoring model fails in production after an upstream data schema change. Manual testing missed the update. Automated validation would have caught the mismatch, blocked the deployment, and triggered alerts—preventing bad predictions.

3. Monitoring Models and Automating Retraining Loops

Monitoring is critical once a model is live. Key signals include input data drift, changes in prediction distributions, and latency spikes. Without these, teams won’t know when to retrain or intervene.

Tools like EvidentlyAI monitor drift and data quality. Prometheus and Grafana track performance and infrastructure metrics. Alerts and thresholds can trigger retraining pipelines in systems like Kubeflow or MLflow.

Example: A demand forecasting model sees rising error rates during a holiday season. EvidentlyAI flags a drift in sales data. A scheduled job kicks off model retraining using updated features and the new model version is pushed through CI/CD.

Curious how AI agents go from idea to production? Explore the full build-to-deploy process with practical tools and workflows. See what it takes to design, train, and launch AI agents that actually perform in the real world.

Automation in MLOps depends on specialized tools that handle pipeline execution, version tracking, and performance visibility. This section highlights key categories of tools that support repeatable, scalable, and transparent ML operations.

In machine learning, workflow orchestration automates the sequence of tasks—like preprocessing, training, validation, and deployment—across environments. CI/CD refers to integrating and deploying ML models through automated testing, validation, and release steps, triggered by events like new code, data, or model versions.

Popular orchestration tools:

CI/CD tools:

Example Use Case: A model training pipeline is triggered when new customer data arrives in S3. Airflow orchestrates data cleaning, feature extraction, and model training in Kubeflow. GitHub Actions runs integration tests and deploys the final model if metrics meet thresholds.

ML experiments often vary by parameters, datasets, and environments. Without proper tracking, reproducing results or understanding what worked becomes impossible. Experiment tracking tools centralize this process, helping teams compare runs, monitor performance, and version models systematically.

Key tools:

Features like version diffing, run comparisons, and interactive dashboards allow teams to make informed decisions and maintain transparency across workflows.

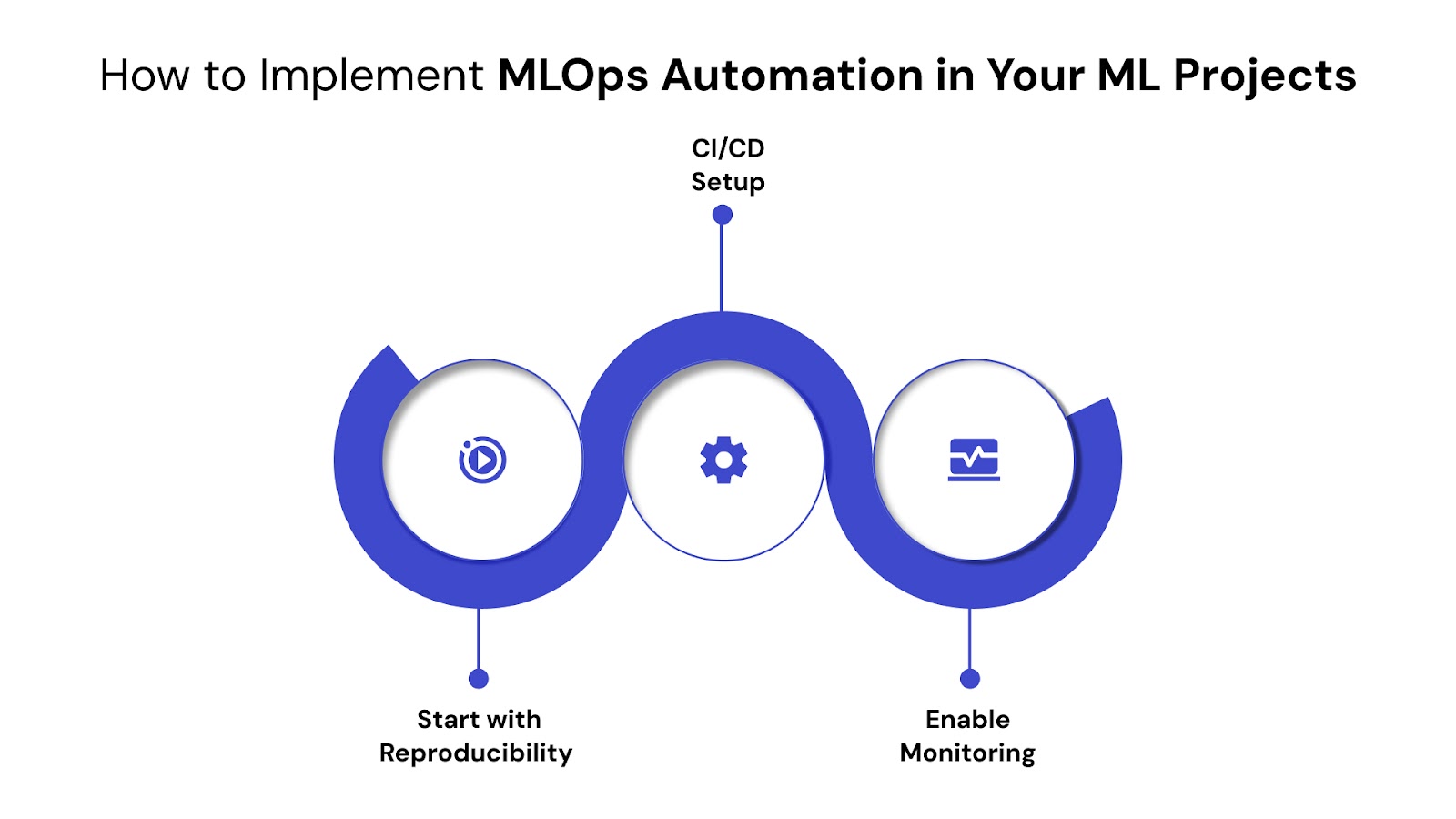

Building automated ML systems requires a structured approach—starting with reproducibility and ending with continuous delivery and monitoring. This section outlines practical steps to integrate MLOps automation into your workflows without overengineering.

Reproducibility is the foundation of MLOps. Without it, automated pipelines produce inconsistent results and make debugging difficult. Teams must standardize environments, control randomness, and track exact inputs for every model version.

Minimum requirements:

Beginner Checklist:

CI/CD in machine learning handles far more than code—it must validate data, retrain models, test performance, and manage staged rollouts. These pipelines ensure models are continuously production-ready.

Typical CI/CD flow:

Best Practices:

Monitoring ensures your automated systems don’t operate blindly. Post-deployment, you need real-time visibility into performance and data behavior.

What to track:

Set up manual reviews for critical thresholds and auto-retraining triggers for low-risk models. Automation must also serve audit needs—log predictions, inputs, model versions, and outcomes to meet internal and regulatory standards.

Struggling with fragmented data systems? Explore the tools and solutions built to unify, sync, and scale modern data integration. Find out what fits your stack and business needs.

While automation improves consistency and efficiency, it also introduces new challenges. Blindly scaling pipelines without planning for edge cases, tooling complexity, or ethical risks can break production systems. This section outlines three critical areas to manage when implementing MLOps automation.

1. Managing Data Drift and Model Performance Degradation

Data drift occurs when the input data distribution shifts from what the model was trained on. Model decay refers to performance decline over time, often due to changing user behavior or external factors.

Example: A fraud detection model trained on 2023 transaction patterns underperforms in 2025 due to changes in fraud tactics. The input features no longer reflect user behavior accurately.

Automation helps by detecting drift with tools like EvidentlyAI and triggering retraining. But human review is still required to validate if a retrain is appropriate or if feature engineering must change.

Retraining strategies include:

2. Tool Sprawl and Integration Complexity

Many MLOps stacks combine orchestration, monitoring, experiment tracking, CI/CD, and model serving tools—often from different vendors. Without standardization, this leads to tool sprawl, high maintenance, and fragile glue-code.

Solutions:

Unmanaged config sprawl—such as scattered YAML files or duplicated secrets—can lead to deployment errors and security risks.

3. Automation Without Oversight Leads to Risk

Automation does not replace the need for human judgment. In regulated, sensitive, or high-impact systems, approvals and reviews are essential to prevent harmful outcomes.

Scenarios needing human intervention:

Example: In 2020, a hiring algorithm used by a major tech company was automated end-to-end and deprioritized female candidates. Lack of human review delayed detection and caused reputational damage.

Build approval workflows, explainability tools, and ethical review gates into the automation pipeline to avoid such outcomes.

At QuartileX, we bridge the gap between machine learning experimentation and real-world enterprise deployment. Our MLOps solutions are built to scale with your business, combining robust automation, real-time monitoring, and end-to-end lifecycle support — so your models aren’t just developed, but consistently deliver value in production.

QuartileX doesn’t just help you build ML pipelines — we help you industrialize them. Whether you’re building your first model or managing hundreds across departments, our solutions are designed to grow with you.

MLOps automation helps eliminate manual errors, improve reproducibility, and accelerate deployment across machine learning workflows. To implement it effectively, start with environment consistency, use CI/CD for model lifecycle, and set up real-time monitoring. Choose tools that align with your team’s maturity and workflows. Regularly audit automated systems to ensure accuracy and accountability.

Many teams struggle with fragmented tools, inconsistent results, and scaling issues in production. QuartileX helps you design and deploy end-to-end MLOps automation frameworks tailored to your environment and goals. Our solutions reduce setup friction and ensure your ML systems are robust, traceable, and production-ready.

Get in touch with our data experts to assess your current ML infrastructure and build reliable automation pipelines that fit your business needs.

A: MLOps automation refers to automating the ML lifecycle—from data processing and model training to deployment and monitoring. It reduces manual effort, ensures reproducibility, and accelerates time to production. This is critical for scaling ML in enterprise environments.

A: It tracks code, data, and model versions using tools like Git, DVC, and MLflow. This makes it easy to replicate experiments or roll back when needed. Automated pipelines ensure consistency across environments.

A: Popular tools include Kubeflow and Airflow for orchestration, MLflow and Weights & Biases for tracking, and GitHub Actions or Jenkins for CI/CD. These tools help streamline and connect each stage of the ML pipeline. Tool choice depends on team size, infrastructure, and complexity.

A: Common issues include tool sprawl, configuration drift, and lack of monitoring. Integrating different tools and managing infrastructure-as-code adds complexity. Human oversight is also needed to avoid automation errors in high-risk use cases.

A: Use tools like EvidentlyAI, Prometheus, and Grafana to track performance metrics and detect drift. Set thresholds to trigger automated retraining pipelines. Combine with manual approvals for critical systems to ensure quality control.

From cloud to AI — we’ll help build the right roadmap.

Kickstart your journey with intelligent data, AI-driven strategies!