
Is your enterprise struggling to turn its vast data reserves into actionable insights? With data volumes projected to reach 181 zettabytes by the end of 2025, many organizations find themselves overwhelmed, battling data silos, and facing inconsistent information. This challenge directly impacts decision-making, as poor data quality alone costs organizations an average of $12.9 million annually, according to Gartner.

The solution lies in data orchestration. This blog will illustrate how intelligent data orchestration delivers real-time insights, fuels AI initiatives, and provides the scalable foundation necessary for sustained enterprise growth and competitive advantage.

Data orchestration is the systematic automation and coordination of your enterprise's entire data pipeline. This encompasses everything from initial data ingestion and transformation to its delivery and activation for analytics, machine learning, and operational use. It's the control plane that ensures data assets are consistently available, accurate, and ready for critical business applications.

Data integration primarily involves connecting disparate data sources and moving data between them, establishing the basic data pathways. Data orchestration, however, provides the intelligent layer above this. It governs the entire workflow, encompassing the precise sequencing, scheduling, dependency management, and real-time monitoring of these integrations and all subsequent data processing steps.

This includes robust error handling, automated retries, and continuous quality checks, ensuring data not only flows but flows correctly, efficiently, and with purpose across your entire data ecosystem.
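To make the distinction concrete, here is a minimal sketch of what an orchestration layer adds on top of plain integration: dependency-aware sequencing plus automated retries. It uses only the Python standard library (`graphlib`, available from Python 3.9). The task names (`ingest`, `flaky_transform`, `publish`) and the helpers `run_with_retries` and `run_pipeline` are hypothetical, chosen for illustration; a production platform would also handle scheduling, monitoring, and alerting.

```python
import time
from graphlib import TopologicalSorter


def run_with_retries(task, max_attempts=3, delay_s=0.0):
    """Call task(); retry on any exception, up to max_attempts in total."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == max_attempts:
                raise  # give up after the final attempt
            time.sleep(delay_s)


def run_pipeline(tasks, dependencies):
    """Run each task only after the tasks it depends on; return the order used."""
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        run_with_retries(tasks[name])
    return order


# Hypothetical three-step workflow; the transform step fails once, then succeeds.
log = []
attempts = {"transform": 0}


def ingest():
    log.append("ingest")


def flaky_transform():
    attempts["transform"] += 1
    if attempts["transform"] < 2:
        raise RuntimeError("transient failure")
    log.append("transform")


def publish():
    log.append("publish")


tasks = {"ingest": ingest, "transform": flaky_transform, "publish": publish}
# Each key maps to the set of tasks that must finish first.
dependencies = {"transform": {"ingest"}, "publish": {"transform"}}

order = run_pipeline(tasks, dependencies)
print(order)
```

The integration steps themselves are just the three functions; the orchestration is everything around them: the ordering derived from declared dependencies and the retry policy applied uniformly to every step.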

For the modern enterprise, integration is a necessary component, but orchestration is the strategic imperative that transforms raw data connections into a controlled, optimized, and resilient data supply chain.

Also read: Tips on Building and Designing Scalable Data Pipelines

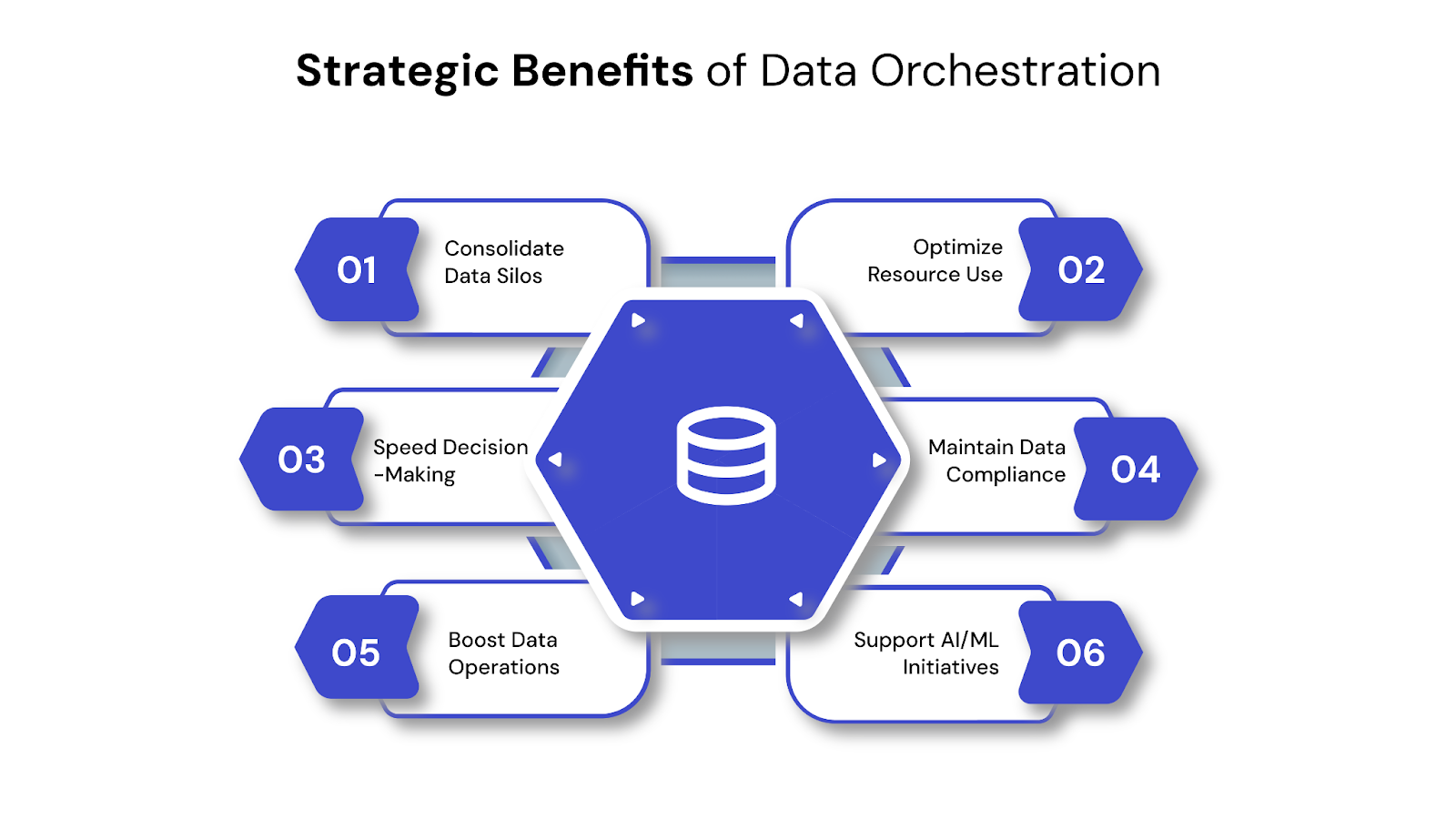

Implementing robust data orchestration isn't merely an operational improvement; it's a strategic investment that fundamentally shifts how your organization utilizes its most critical asset: data. Here’s how a well-orchestrated data ecosystem delivers tangible competitive advantages:

This strategic alignment of data, processes, and technology through orchestration is no longer a luxury but a fundamental requirement for enterprises aiming to secure a competitive edge and drive sustainable growth in a data-saturated landscape.

Effective data orchestration transforms chaotic data flows into a streamlined, high-performance asset. This isn't a nebulous concept; it's a meticulously engineered process, typically broken down into three interconnected phases. Understanding these operational steps is key to building a resilient data pipeline that empowers your enterprise.

This initial phase is about strategically acquiring and consolidating data from every relevant source across your complex enterprise landscape. It's where the raw material enters your data supply chain, ready for refinement.

Once ingested, raw data is rarely fit for direct consumption. This critical phase involves meticulous processing, cleansing, and enhancement to convert raw bits into actionable business intelligence.

The final, decisive phase ensures that your processed, high-quality data is readily accessible and consumable by the systems, applications, and users who need it to drive direct business actions. This is where data truly translates into value.

This structured, automated approach is how data orchestration transforms raw data into a reliable, actionable asset that consistently powers your enterprise.
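The three phases described above (ingestion, transformation, activation) can be sketched as three composable functions. This is an illustrative toy under stated assumptions, not a reference implementation: the source names (`crm`, `billing`), the record fields (`id`, `name`), and the cleansing rules are all hypothetical.

```python
def ingest(sources):
    """Phase 1: consolidate raw records from every source into one list."""
    return [record for source in sources for record in source]


def transform(records):
    """Phase 2: drop records without an id, deduplicate by id, normalise names."""
    seen = set()
    cleaned = []
    for record in records:
        record_id = record.get("id")
        if record_id is None or record_id in seen:
            continue
        seen.add(record_id)
        cleaned.append(
            {"id": record_id, "name": record.get("name", "").strip().title()}
        )
    return cleaned


def activate(records):
    """Phase 3: shape the data for consumers, here a lookup keyed by id."""
    return {record["id"]: record for record in records}


# Hypothetical source systems with overlapping and incomplete records.
crm = [{"id": 1, "name": " ada lovelace "}, {"id": None, "name": "unknown"}]
billing = [{"id": 1, "name": "Ada Lovelace"}, {"id": 2, "name": "alan turing"}]

customer_view = activate(transform(ingest([crm, billing])))
print(customer_view)
```

Keeping each phase as a separate function mirrors how orchestration tools typically treat them: as independently monitored, retryable steps rather than one opaque job.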

The value of data orchestration is not theoretical; it's directly measurable. It translates into tangible improvements across operations, decision-making, and competitive advantage. Enterprises using mature orchestration capabilities consistently report accelerated insights, reduced operational costs, and enhanced agility.

Here's how robust data orchestration delivers strategic advantages and real-world impact:

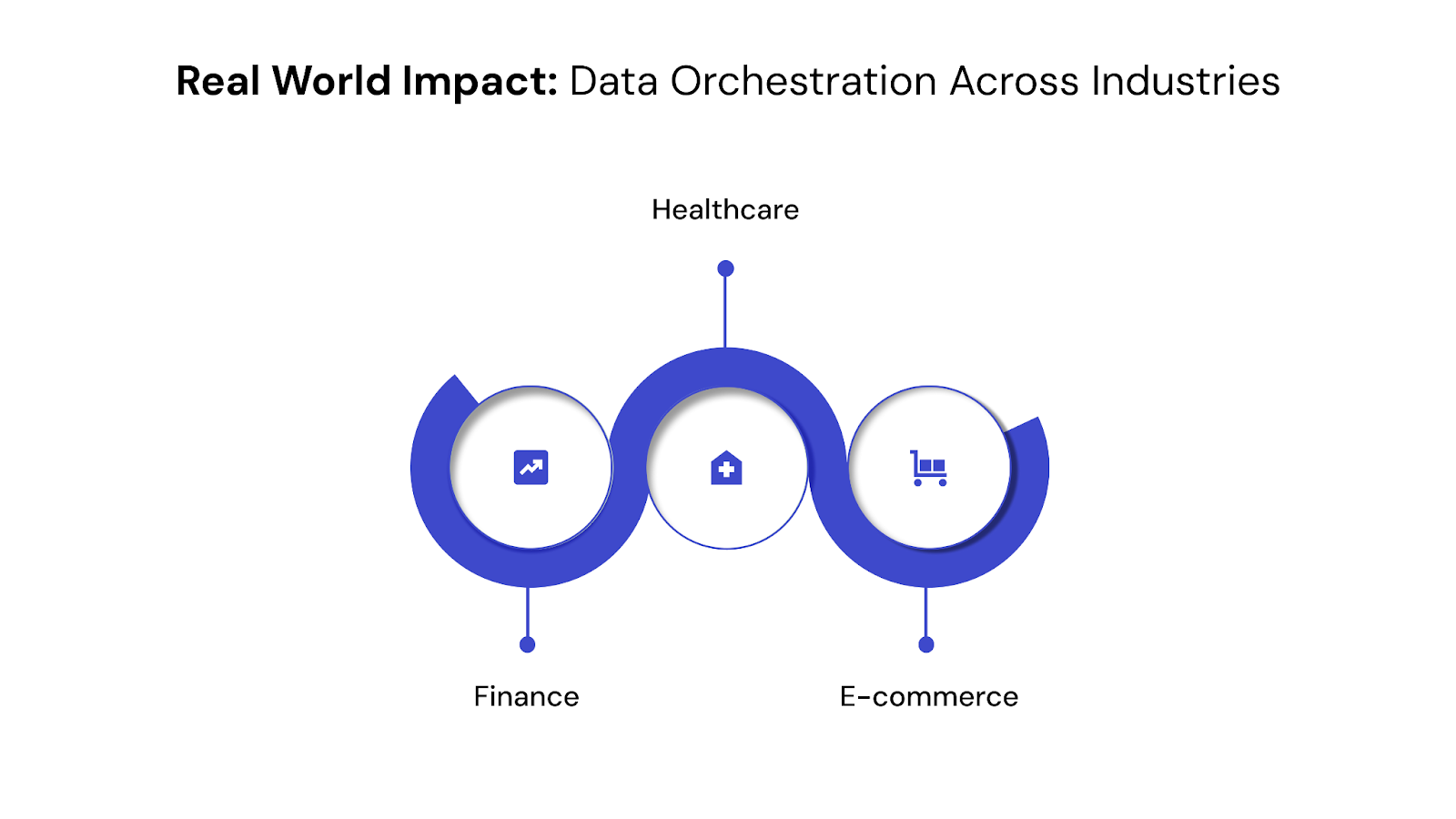

A major investment firm faced persistent challenges with regulatory compliance reporting, struggling with the manual aggregation and validation of vast, siloed data from disparate trading platforms and risk systems. This led to delays and increased the risk of non-compliance.

By implementing data orchestration, the firm automated the real-time aggregation and validation of transactional data, ensuring immediate access to accurate, audit-ready information. This enabled a significant reduction in the time and effort spent on compliance cycles, directly addressing the fact that poor data quality costs financial institutions an average of $15 million annually in regulatory fines and penalties.

A large hospital network struggled with delayed diagnoses and inefficient patient care due to fragmented patient records spread across EHRs, lab systems, and specialist clinics. This often resulted in redundant tests and incomplete patient histories for clinicians.

Data orchestration unified these disparate data sources into a single, comprehensive patient view, accessible in real time by care providers. This immediate access to holistic patient histories improves diagnostic accuracy and reduces redundant tests, helping to address the estimated $30 billion that the U.S. health system loses annually due to a lack of healthcare data interoperability.

An online retail giant struggled to deliver truly personalized customer experiences due to fragmented real-time browsing data and static customer profiles. This led to missed sales opportunities and lower customer engagement.

By orchestrating real-time streams of browsing activity, purchase history, and preference data, the retailer enabled dynamic content and personalized product recommendations at scale. This resulted in a significant increase in conversion rates, with many brands reporting that personalization can boost e-commerce conversion rates by 10-15% and increase customer satisfaction.

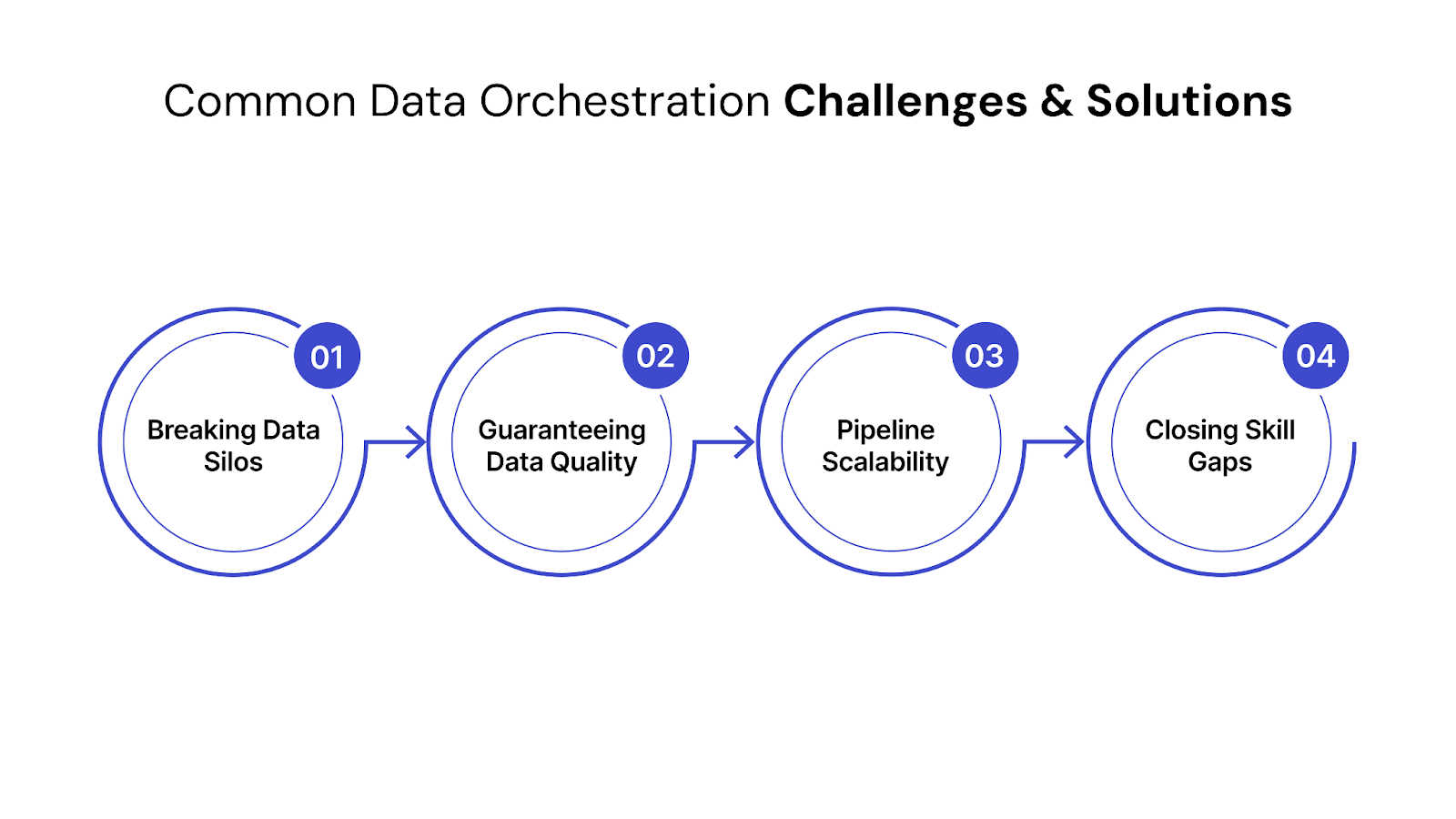

Implementing and scaling data orchestration within a complex enterprise environment presents distinct challenges. Recognizing these obstacles upfront and addressing them with strategic foresight is crucial for maximizing your data's potential and avoiding common pitfalls.

Data often resides in isolated systems with varying formats and schemas, preventing a unified view and hindering cross-functional insights. This fragmentation creates significant manual effort for data consolidation.

The Strategic Solution: Implement a centralized orchestration layer with robust connectors to unify disparate data sources into a single, accessible data fabric. This breaks down silos, enabling consistent data availability across the entire enterprise.

Inaccurate, incomplete, or inconsistent data propagating through pipelines undermines analysis, erodes trust in insights, and can lead to costly errors or non-compliance. Manual quality checks are unsustainable at enterprise scale.

The Strategic Solution: Embed automated data quality validation and cleansing directly into orchestration workflows, with continuous monitoring and alerts. This proactive approach ensures that only high-quality, trustworthy data fuels downstream systems and critical decisions.
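As a rough illustration of a quality check embedded in a workflow, the sketch below validates each batch and halts the pipeline when too many records fail. The function names (`validate`, `quality_gate`), the required fields, and the rejection threshold are assumptions made for this example; real platforms usually pair such a gate with monitoring and alerting.

```python
def validate(records, required_fields):
    """Split records into (valid, rejected) based on required non-empty fields."""
    valid, rejected = [], []
    for record in records:
        missing = [f for f in required_fields if record.get(f) in (None, "")]
        if missing:
            rejected.append({"record": record, "missing": missing})
        else:
            valid.append(record)
    return valid, rejected


def quality_gate(records, required_fields, max_reject_rate=0.1):
    """Return valid records, or raise if the batch's reject rate is too high."""
    valid, rejected = validate(records, required_fields)
    reject_rate = len(rejected) / len(records) if records else 0.0
    if reject_rate > max_reject_rate:
        raise ValueError(
            f"reject rate {reject_rate:.0%} exceeds {max_reject_rate:.0%}"
        )
    return valid


# Hypothetical batch: one of four records is missing its amount.
batch = [
    {"order_id": "A1", "amount": 20.0},
    {"order_id": "A2", "amount": 35.5},
    {"order_id": "A3", "amount": None},
    {"order_id": "A4", "amount": 12.0},
]

clean = quality_gate(batch, ["order_id", "amount"], max_reject_rate=0.5)
print(len(clean))

try:
    quality_gate(batch, ["order_id", "amount"], max_reject_rate=0.1)
    gate_blocked = False
except ValueError:
    gate_blocked = True  # a stricter threshold stops the bad batch
```

Raising an exception, rather than silently dropping bad rows, lets the orchestrator's own error handling decide whether to retry, quarantine the batch, or alert an owner.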

To dive deeper into building trust in your data assets, explore our comprehensive guide on Mastering Data Governance in 2025.

As data volumes, velocity, and the number of data sources grow, managing intricate data pipelines becomes increasingly complex. Manual orchestration is prone to errors and bottlenecks, and it makes scaling operations extremely difficult.

The Strategic Solution: Adopt an intelligent, extensible orchestration platform featuring visual builders and automated scheduling for efficient pipeline design and scaling. Prioritize fault tolerance and automated recovery to ensure pipeline resilience under surging data demands.

The specialized expertise required for building, maintaining, and optimizing complex data pipelines often creates skill gaps within internal teams, increasing reliance on a few experts and slowing down development.

The Strategic Solution: Invest in intuitive orchestration platforms that empower diverse data professionals and consider strategic partnerships for specialized expertise. This augments internal capabilities, accelerating new initiatives and reducing operational burden.

The data landscape is in constant flux, driven by escalating data volumes, new technological paradigms, and evolving business demands. For data leaders, staying ahead of key trends in data orchestration isn't just about curiosity; it's about future-proofing your data strategy and ensuring your enterprise remains agile and innovative.

1. AI/ML-Driven Orchestration

AI and Machine Learning are increasingly being embedded directly into orchestration platforms. This means workflows are becoming self-optimizing, enabling automated anomaly detection, intelligent resource allocation, and predictive failure analysis. For data leaders, this translates into significantly reduced manual oversight, improved efficiency, and enhanced resilience of data systems, allowing proactive issue resolution.

To explore how advanced intelligence can transform your operations, read our guide on Successful Generative AI Deployment Strategies.

2. Data Observability as a Core Pillar

Beyond basic monitoring, data observability focuses on understanding the health and reliability of data throughout its entire lifecycle. It provides comprehensive visibility into data quality, freshness, and lineage, ensuring data trust. Integrating robust observability into your orchestration framework allows proactive identification of data quality issues, shortens incident resolution times, and provides a clear audit trail for compliance and data integrity.
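One of the simplest observability signals mentioned above is freshness. The sketch below flags datasets whose last update is older than an allowed age. The dataset names, timestamps, and the one-hour threshold are hypothetical; a real deployment would read these from pipeline metadata and would track quality and lineage as well.

```python
from datetime import datetime, timedelta, timezone


def freshness_report(last_updated, max_age, now):
    """Label each dataset 'fresh' or 'stale' from its last-update timestamp."""
    return {
        name: "fresh" if now - updated_at <= max_age else "stale"
        for name, updated_at in last_updated.items()
    }


# Fixed reference time so the example is deterministic.
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
last_updated = {
    "orders": now - timedelta(minutes=5),
    "inventory": now - timedelta(hours=3),
}

report = freshness_report(last_updated, max_age=timedelta(hours=1), now=now)
print(report)
```

Passing `now` in explicitly, instead of calling the clock inside the function, keeps the check easy to test and to replay against historical metadata.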

3. Cloud-Native and Hybrid Orchestration

Enterprises are increasingly adopting multi-cloud and hybrid cloud environments. Orchestration solutions are evolving to utilize cloud services and seamlessly manage complex data flows spanning diverse cloud providers and on-premises infrastructure. This trend offers unparalleled scalability, flexibility, and cost efficiency, providing a unified control plane for your entire data ecosystem and reducing infrastructure management overhead.

4. Data Mesh Principles and Decentralized Ownership

The "Data Mesh" architecture advocates for decentralized data ownership, treating data as a product managed by domain-oriented teams. This shifts the orchestration paradigm from a centralized pipeline model to one that supports distributed data products. For leaders, this empowers business units with greater agility, fosters data product discovery across independent domains, and ensures global governance policies are consistently applied across a distributed data landscape.

Selecting the right data orchestration partner is a strategic decision, extending beyond just technology. It's about securing expertise that directly translates your data strategy into tangible business outcomes. QuartileX stands apart as that strategic ally, specifically engineered to navigate and empower complex enterprise data landscapes.

What sets QuartileX apart as your data orchestration partner:

We unlock the full potential of your business data through a smart, strategic, and scalable approach. This expert-led methodology guides you comprehensively from initial assessment through to implementation and ongoing optimization, ensuring lasting business growth.

The era of merely collecting data is over. Today, competitive advantage hinges on an organization's ability to seamlessly orchestrate, transform, and activate its vast data assets into timely, trusted intelligence. Businesses can no longer afford fragmented insights or reactive processes; the future demands a unified, intelligent data flow that drives every strategic decision and customer interaction.

It's time for businesses to critically re-evaluate their data orchestration strategy. Are your current processes truly unlocking your data's full potential, or are they bottlenecks to innovation?

Partner with QuartileX to rethink, optimize, and future-proof your data orchestration framework. Visit our website to schedule a consultation and transform your data into your most powerful strategic engine.

While traditional ETL (Extract, Transform, Load) focuses on moving and preparing data for a destination, data orchestration encompasses a broader scope. It's about automating, monitoring, and managing complex, multi-step data workflows across diverse systems and applications to ensure timely, high-quality data delivery for various business needs, often involving real-time scenarios and advanced analytics.

Absolutely. Data orchestration is critical for regulatory compliance. By automating data lineage tracking, enforcing data quality rules, and ensuring consistent data handling across all systems, it provides the necessary visibility and control to demonstrate adherence to privacy laws and internal governance policies.

While large enterprises certainly benefit immensely, data orchestration is becoming increasingly vital for businesses of all sizes. Even with moderate data volumes, managing fragmented data, ensuring quality, and automating workflows for timely insights can be complex. Orchestration platforms provide scalability and efficiency that are beneficial regardless of initial data scale.

AI/ML models are highly dependent on clean, consistent, and timely data. Data orchestration ensures that these models are continuously fed with high-quality, relevant data by automating collection, transformation, and validation processes. This operationalizes AI, turning theoretical models into practical, impactful business tools.

Common challenges include overcoming existing data silos, ensuring end-to-end data quality, managing the complexity of diverse data sources and transformation logic, and addressing internal skill gaps. A strategic partner like QuartileX helps navigate these challenges by providing expertise and a robust, adaptable framework.
