Data migration tools are software used to securely transfer data between systems, commonly during cloud adoption, app modernization, or infrastructure upgrades. As over 50% of enterprise workloads now run in the cloud, choosing the right tools is critical to avoid data loss, downtime, or compliance risks.

This blog outlines what data migration tools do, compares leading platforms, and offers actionable data migration resources for cloud, database, and real-time system use cases. It’s tailored for professionals managing high-stakes migrations in sectors like finance, healthcare, and SaaS.

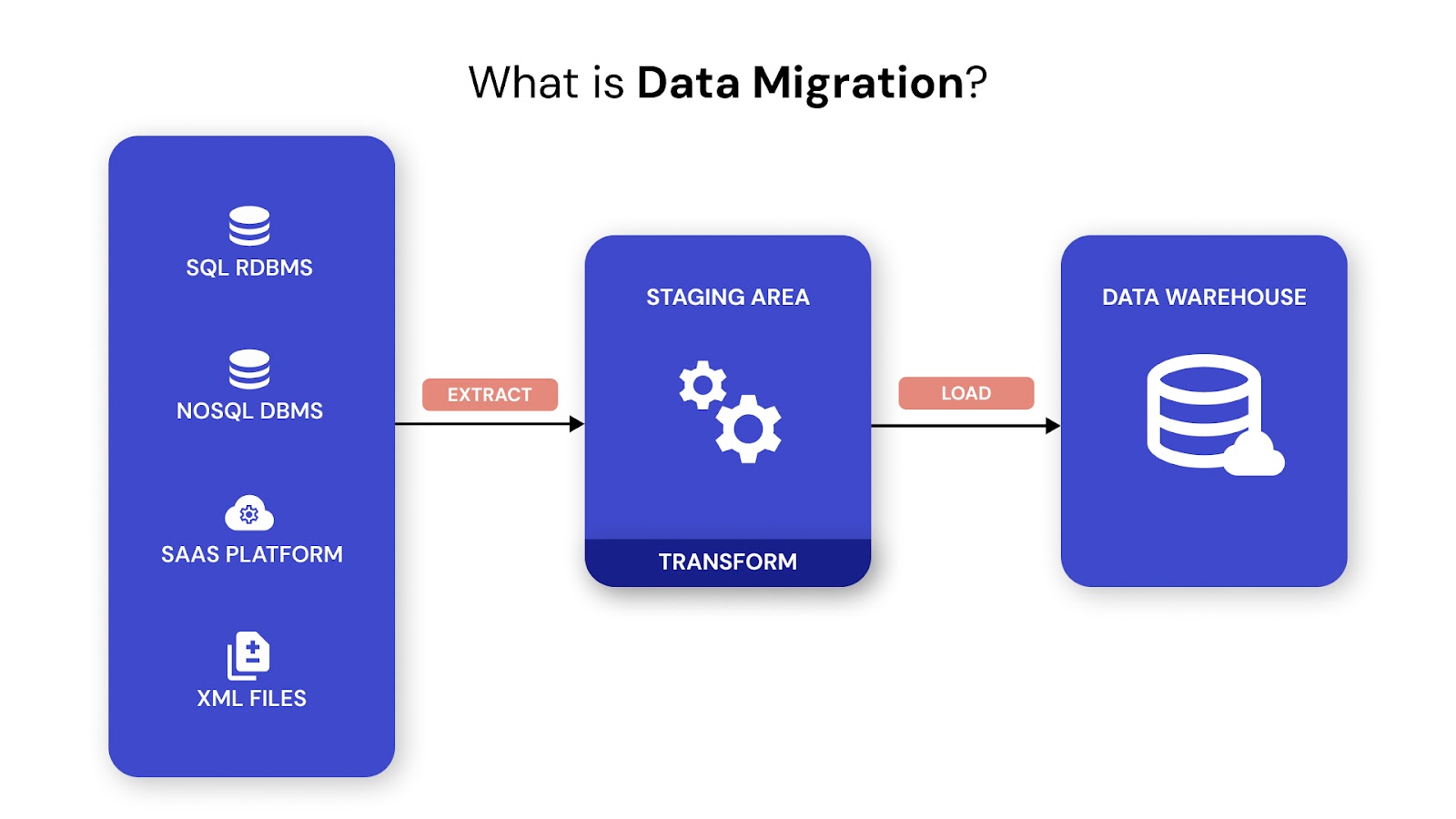

Data migration tools are software solutions that help move data between storage types, formats, or systems while ensuring consistency, accuracy, and minimal disruption. These tools automate mapping, transformation, validation, and transfer processes, allowing teams to handle complex migrations faster and with less risk. They’re especially useful during cloud adoption, system upgrades, database consolidation, or platform transitions.

Why Are Data Migration Tools Needed?

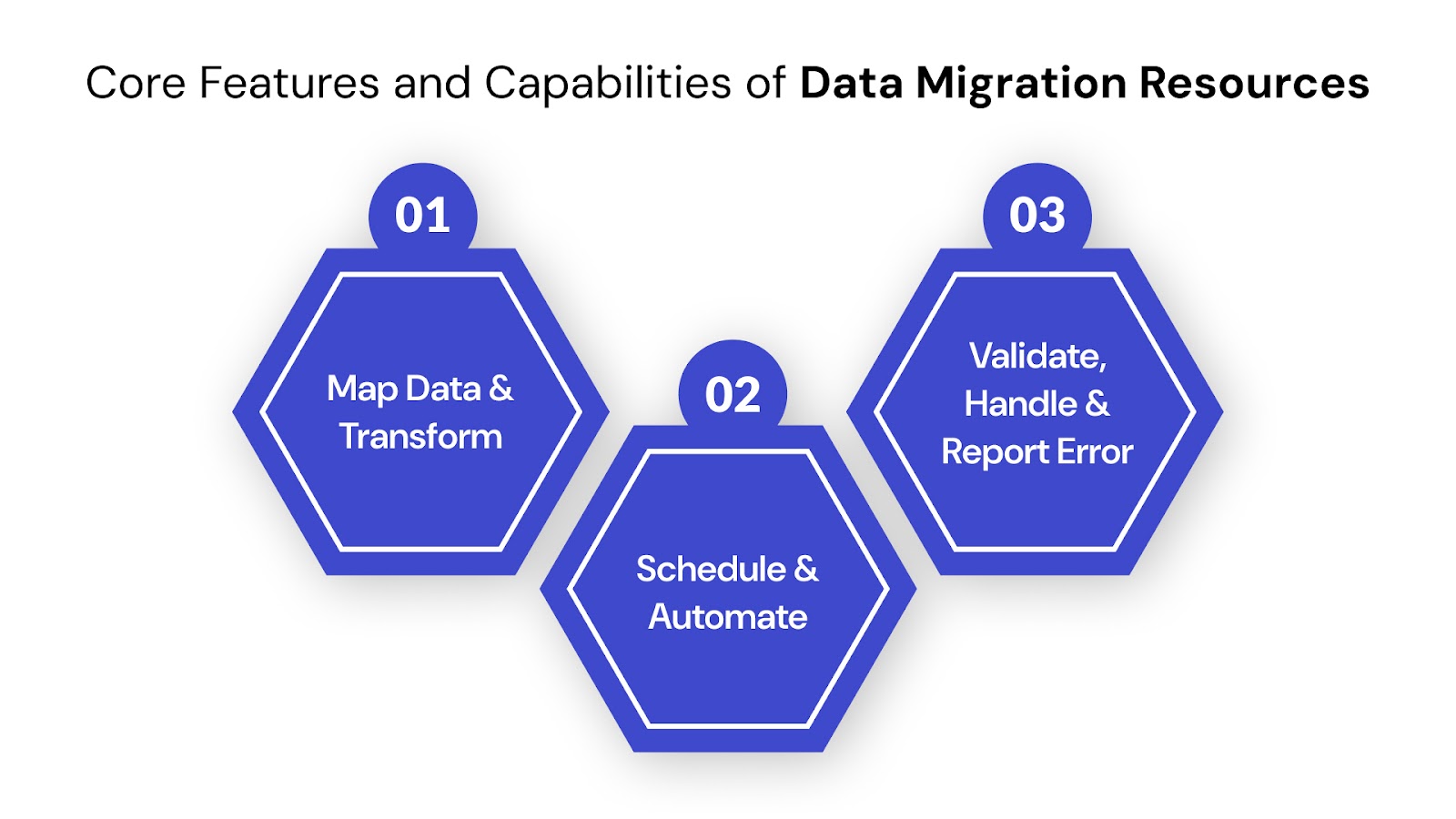

Data migration tools and resources offer a range of capabilities designed to make data transfers secure, fast, and consistent, especially in high-risk environments like healthcare, finance, and SaaS. Below are the core capabilities to look for when evaluating any migration tool or resource:

1. Data Mapping and Transformation

Data mapping ensures that data from the source system correctly aligns with the target schema. Most tools support transformation logic (e.g., field renaming, format conversion) to ensure compatibility between different systems or applications.

Example: Mapping a “customer_id” from a legacy database to “user_id” in a cloud-native app, while converting dates from MM/DD/YYYY to ISO 8601 (YYYY-MM-DD).
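The mapping example above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular migration tool implements it: the `signup_date` field and the `FIELD_MAP` and `DATE_FIELDS` names are assumptions made up for the sketch.

```python
from datetime import datetime

# Hypothetical source-to-target field mapping. Only customer_id -> user_id
# comes from the example above; signup_date is an illustrative extra field.
FIELD_MAP = {"customer_id": "user_id", "signup_date": "signup_date"}
DATE_FIELDS = {"signup_date"}


def to_iso_date(value: str) -> str:
    """Convert an MM/DD/YYYY string to ISO 8601 (YYYY-MM-DD)."""
    return datetime.strptime(value, "%m/%d/%Y").date().isoformat()


def transform_record(record: dict) -> dict:
    """Rename fields per FIELD_MAP and normalize date fields."""
    out = {}
    for source_name, target_name in FIELD_MAP.items():
        value = record[source_name]
        if source_name in DATE_FIELDS:
            value = to_iso_date(value)
        out[target_name] = value
    return out
```

Calling `transform_record({"customer_id": 42, "signup_date": "03/07/2024"})` yields a record keyed by `user_id` with the date rewritten as `2024-03-07`. A malformed date raises `ValueError`, which is usually preferable to silently loading bad data.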

2. Scheduling and Automation

Scheduling capabilities allow teams to plan data transfers during off-peak hours or maintenance windows, minimizing operational disruption. Automation workflows help eliminate repetitive manual tasks and reduce the risk of human error.

Example: Automating nightly ETL runs to sync on-premise databases with cloud data lakes.
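As a rough sketch of the scheduling idea, the function below computes when the next nightly run should start. The 02:00 default is an assumed off-peak time, and the function uses naive local datetimes. Real schedulers (cron, a workflow orchestrator, or a tool's built-in scheduler) also handle time zones, retries, and overlapping runs.

```python
from datetime import datetime, time, timedelta


def next_run(now: datetime, run_at: time = time(2, 0)) -> datetime:
    """Return the next occurrence of run_at strictly after now.

    run_at defaults to 02:00, an assumed off-peak window.
    """
    candidate = datetime.combine(now.date(), run_at)
    if candidate <= now:
        # Today's window has already passed; schedule for tomorrow.
        candidate += timedelta(days=1)
    return candidate
```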

3. Validation, Error Handling, and Reporting

Top-tier tools validate data integrity before, during, and after migration. They also provide logs, alerts, and rollback options for error resolution. Comprehensive reporting helps teams stay compliant and audit-ready.

Example: Automatic checksum validation and alert generation if row counts mismatch between source and target.
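A simplified version of that check might look like the following. It uses Python's built-in `sqlite3` module purely as a stand-in for real source and target systems, and the `table_fingerprint` and `validate` helpers are hypothetical names for this sketch. Hashing `repr(row)` only works when both sides return identical Python values, so a cross-platform migration would need a canonical row serialization instead.

```python
import hashlib
import sqlite3


def table_fingerprint(conn: sqlite3.Connection, table: str, key: str):
    """Return (row_count, SHA-256 hex digest) over rows ordered by key."""
    digest = hashlib.sha256()
    count = 0
    # Identifiers are interpolated into SQL, so pass only trusted names.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def validate(source, target, table: str, key: str) -> list:
    """Compare source and target; return a list of problems (empty if OK)."""
    src_count, src_hash = table_fingerprint(source, table, key)
    tgt_count, tgt_hash = table_fingerprint(target, table, key)
    problems = []
    if src_count != tgt_count:
        problems.append(
            f"row count mismatch: source={src_count}, target={tgt_count}"
        )
    if src_hash != tgt_hash:
        problems.append("checksum mismatch")
    return problems
```

A non-empty result is the point at which a real pipeline would raise an alert or trigger a rollback.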

To apply these capabilities effectively, it's crucial to choose the right type of migration tool based on your project’s complexity.

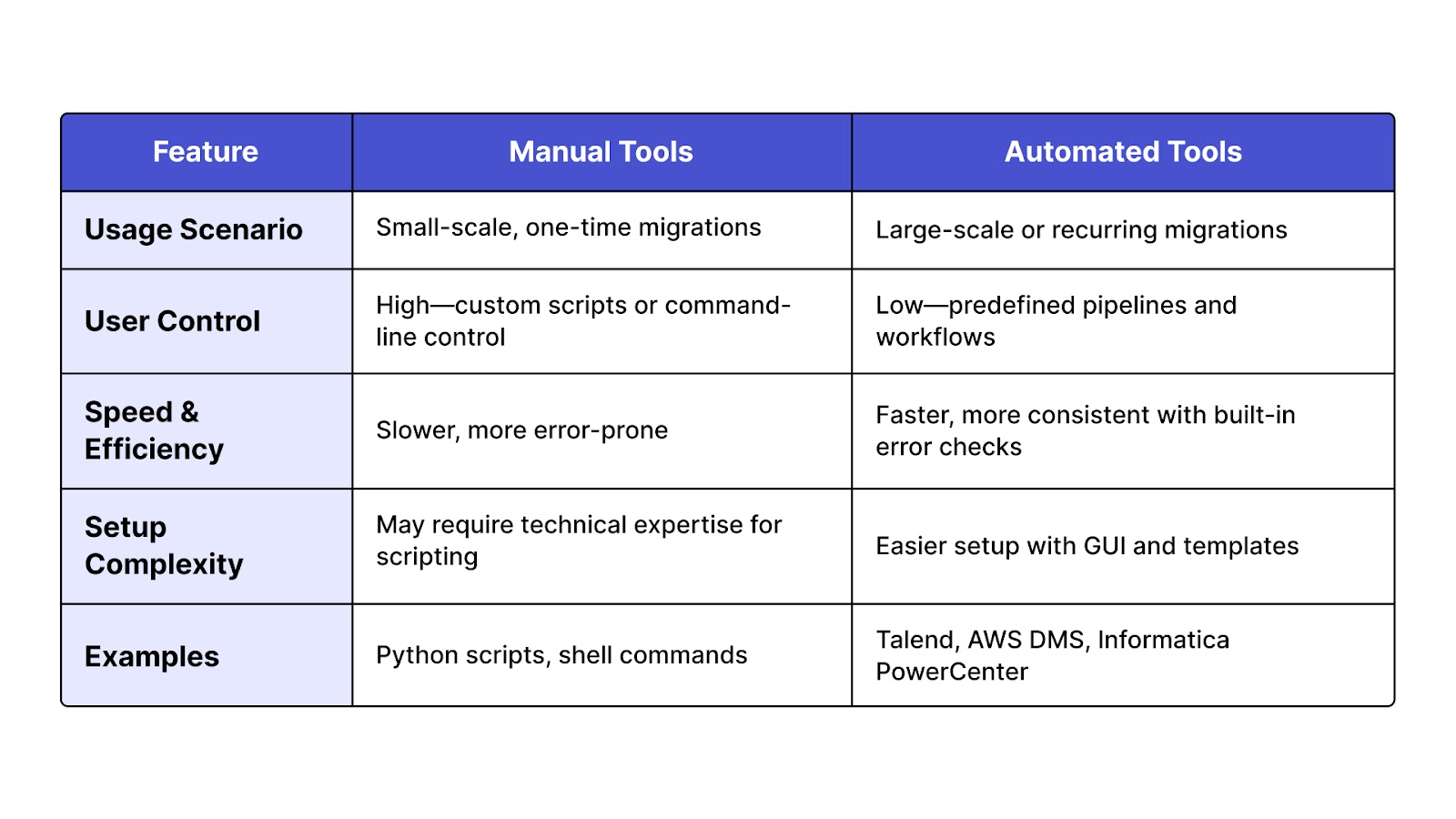

Not all migrations require the same level of automation. Choosing between manual and automated tools depends on the complexity, scale, and repeatability of your migration workflow.

Manual tools offer deep customization but require significant effort and technical expertise. Automated tools, on the other hand, streamline operations and reduce the risk of error through predefined workflows.

Beyond tool selection, understanding the nature of your data—whether structured or unstructured—is key to ensuring a successful migration.

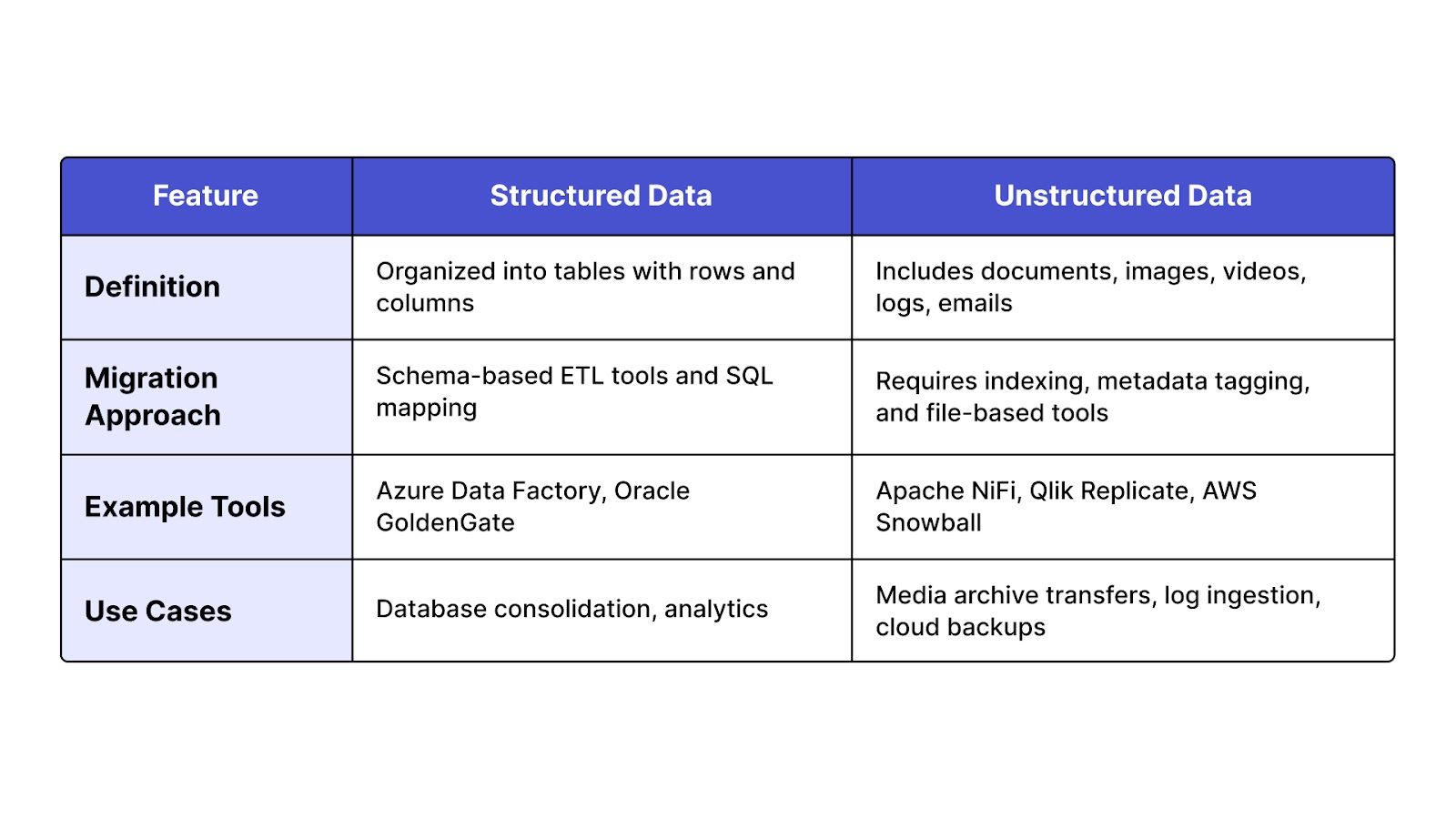

Data formats vary dramatically—and so must your migration tools. The effectiveness of a migration depends on how well your tools handle structured datasets versus unstructured files.

Structured data follows predefined schemas, while unstructured data requires flexible processing methods, indexing, and tagging for successful migration.

Data migration is a high-stakes process. A single misstep can lead to data corruption, extended downtime, or regulatory violations. Access to accurate and well-structured resources enables teams to reduce these risks through careful planning, automation, and compliance checks.

This is especially critical in regulated sectors such as healthcare, finance, and government, where data must remain secure, traceable, and compliant with frameworks like HIPAA, GDPR, or Reserve Bank of India (RBI) mandates. Without proper guidance and documentation, teams often struggle with tool misconfiguration, data loss, or mismatched schemas.

Official documentation portals offer step-by-step guidance, best practices, troubleshooting tips, and architecture diagrams. These are often the first and most reliable source of truth for platform-specific migrations.

Open-source tools provide flexibility, community support, and transparency for teams that require customizable solutions. These are ideal for teams looking to avoid vendor lock-in or for those with hybrid or unconventional setups.

Vendor-published migration guides offer tool-specific instructions tailored to each vendor's ecosystem. They often include architecture diagrams, configuration templates, and sample workflows.

When official docs fall short, developer communities and expert networks fill the gap with real-world solutions, troubleshooting tips, and peer-reviewed answers.

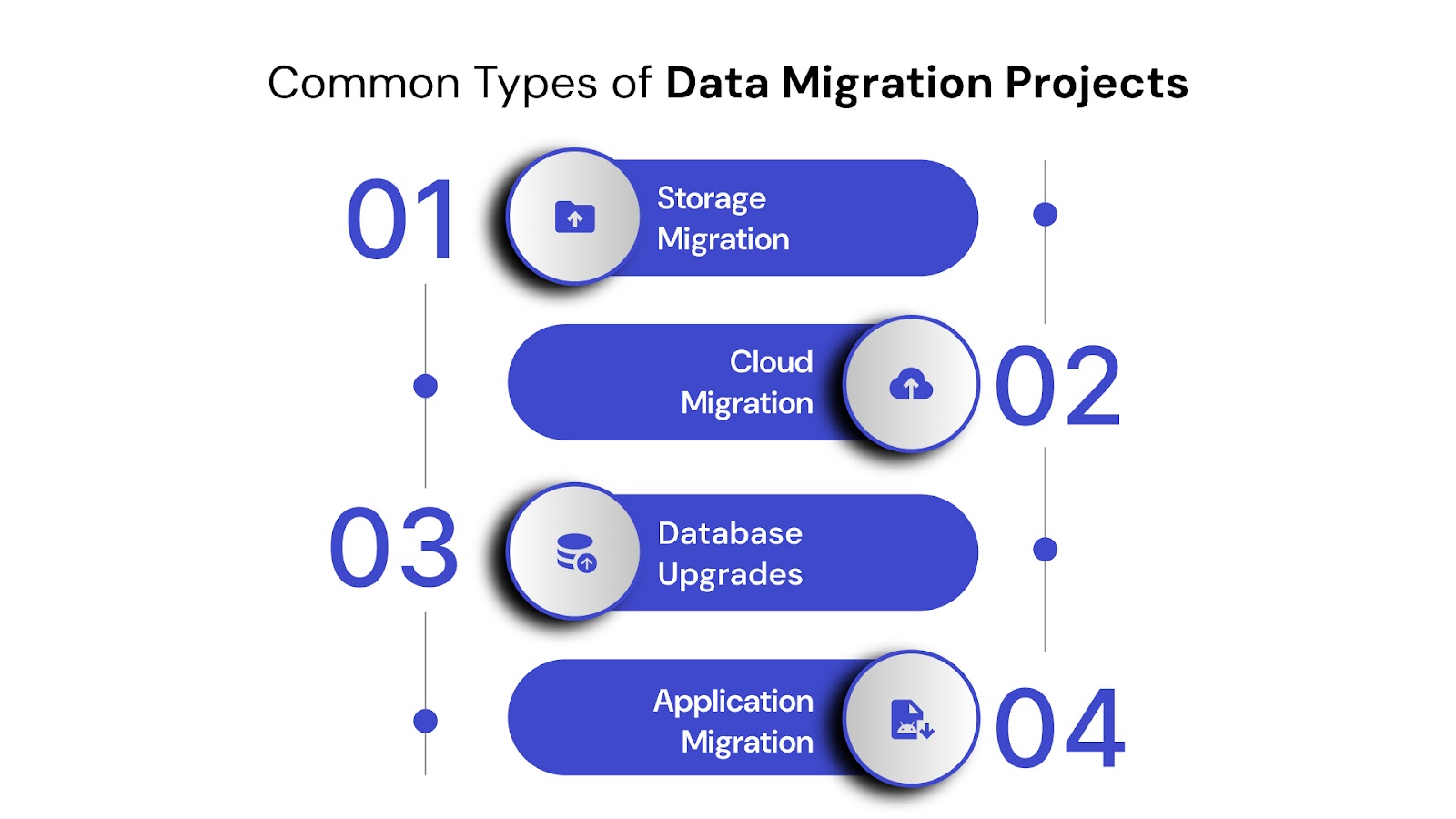

Data migration isn’t a one-size-fits-all process. Each migration type comes with its own set of challenges, goals, and tooling requirements. Understanding the differences can help teams plan better and choose the most suitable migration strategy.

1. Storage Migration

Storage migration involves moving data from one physical storage system to another, typically for better performance, cost efficiency, or capacity. It is common during hardware upgrades or when transitioning to network-attached storage (NAS) or a storage area network (SAN).

Key considerations: Data integrity, downtime, and access permissions.

Use case: Migrating from legacy hard drives to a high-speed SSD-based NAS.

2. Cloud Migration

Cloud migration refers to transferring digital assets—such as files, databases, or entire application environments—from on-premise systems to cloud platforms like AWS, Azure, or Google Cloud.

Goals: Scalability, remote accessibility, disaster recovery, and reduced infrastructure overhead.

Use case: Moving an on-premise CRM to a cloud-based SaaS alternative.

3. Database Upgrades and Platform Switching

This includes both upgrading an existing database (e.g., SQL Server 2014 to SQL Server 2022) and switching to a different database platform (e.g., Oracle to PostgreSQL). These migrations often involve schema conversion, data type mapping, and compatibility testing.

Key risk: Data corruption or loss during transformation.

Use case: Replacing a proprietary RDBMS with an open-source alternative for licensing flexibility.
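To make the data type mapping step concrete, here is a small sketch for the Oracle-to-PostgreSQL case. The mapping table covers only a handful of commonly used equivalents and is far from exhaustive, and `convert_column_type` is a hypothetical helper. Dedicated schema conversion tools handle many more types and edge cases.

```python
import re

# A few commonly used Oracle -> PostgreSQL equivalents (not exhaustive).
TYPE_MAP = {
    "VARCHAR2": "VARCHAR",
    "NUMBER": "NUMERIC",
    "DATE": "TIMESTAMP",  # Oracle DATE also stores a time component
    "CLOB": "TEXT",
    "BLOB": "BYTEA",
}
# Target types that keep a length or precision argument.
KEEPS_ARGS = {"VARCHAR", "NUMERIC"}


def convert_column_type(oracle_type: str) -> str:
    """Translate one Oracle column type, e.g. 'NUMBER(10,2)'."""
    match = re.fullmatch(r"\s*(\w+)\s*(\(.*\))?\s*", oracle_type)
    if not match:
        raise ValueError(f"cannot parse type: {oracle_type!r}")
    base = match.group(1).upper()
    args = match.group(2) or ""
    if base not in TYPE_MAP:
        # Fail loudly so unmapped types get a manual review.
        raise ValueError(f"no mapping for {base}; review manually")
    target = TYPE_MAP[base]
    if target in KEEPS_ARGS:
        # Drop Oracle's CHAR/BYTE length qualifiers, e.g. '(50 CHAR)'.
        args = re.sub(r"\s+(CHAR|BYTE)\b", "", args, flags=re.IGNORECASE)
        return target + args
    return target
```

Raising on unknown types is a deliberate choice here: a silent default would hide exactly the kind of mismatch that causes corruption or loss during transformation.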

4. Application Migration

Application migration involves shifting applications from one environment to another, such as from on-premise to cloud, or between cloud providers. This type may include re-platforming, re-hosting, or full re-architecting of the application.

Challenges: Dependency mapping, API compatibility, and performance optimization.

Use case: Migrating a legacy monolithic ERP system to a containerized microservices-based architecture in the cloud.
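Dependency mapping can be sketched as a graph problem: if each component lists what it depends on, a topological sort gives an order in which dependencies are migrated first. The example below uses `graphlib` from the Python standard library (3.9+). The component names are invented for illustration.

```python
from graphlib import CycleError, TopologicalSorter


def migration_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return components ordered so each one follows its dependencies.

    dependencies maps a component to the set of components it depends on.
    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(dependencies).static_order())


# Hypothetical ERP components, for illustration only.
deps = {
    "database": set(),
    "erp-api": {"database"},
    "reporting": {"erp-api", "database"},
}
order = migration_order(deps)  # database first, reporting last
```

A `CycleError` is a useful signal in its own right: circular dependencies usually mean the components involved have to be migrated together or decoupled first.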


Data migration is rarely straightforward. Even with top-tier tools, projects can fail if teams overlook the key challenges that accompany system transitions. This is where the right resources—guides, documentation, templates, and expert support—become essential to reduce risk and ensure success.

1. Risk of Data Loss During Transfer

When moving large volumes of critical data, there's always a risk of corruption, truncation, or incomplete transfer. Without validation scripts, transformation logs, and checksum comparisons, teams might not even realize what's missing until it's too late.

How resources help: Official documentation often includes pre-migration checklists and post-transfer validation techniques that safeguard data integrity.

2. Downtime and Business Disruption

Unplanned downtime during migration can halt operations, impact customer experience, and lead to financial losses—especially in 24/7 systems like banking, logistics, or healthcare.

How resources help: Scheduling tools, rollback strategies, and workload balancing best practices (often documented in vendor guides) help minimize impact and maintain continuity.

3. Compatibility Across Systems and Formats

Different systems may store and structure data in unique ways. For example, a column in one database may not have an equivalent in another, or file encodings may clash. These mismatches can cause critical failures during mapping or transformation.

How resources help: Community forums and tool-specific compatibility matrices guide users on schema conversion, format normalization, and workarounds for edge cases.
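One lightweight way to catch such mismatches before a migration is to diff the source and target schemas. The sketch below assumes each schema is already available as a simple column-name-to-type dictionary. The `schema_diff` function and its output keys are hypothetical, and the type comparison is a plain case-insensitive string match rather than a true compatibility check.

```python
def schema_diff(source: dict[str, str], target: dict[str, str]) -> dict:
    """Report columns that are missing, extra, or typed differently."""
    shared = set(source) & set(target)
    return {
        # Source columns with no equivalent in the target.
        "missing_in_target": sorted(set(source) - set(target)),
        # Target columns the source does not populate.
        "extra_in_target": sorted(set(target) - set(source)),
        # (column, source_type, target_type) where declared types differ.
        "type_mismatches": sorted(
            (col, source[col], target[col])
            for col in shared
            if source[col].upper() != target[col].upper()
        ),
    }
```

Running this against every table in a pre-migration audit gives a concrete list of columns that need a mapping decision.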

4. Compliance and Audit Requirements (GDPR, HIPAA)

Industries handling sensitive data must follow strict regulations during migration. Poor encryption, insecure transfer protocols, or missing logs can lead to non-compliance—and hefty penalties.

How resources help: Vendor-specific documentation and compliance checklists help teams align with legal standards like GDPR, HIPAA, or PCI-DSS. They also provide guidance on setting up audit trails and access controls.


Selecting the right data migration tool isn't just about features—it’s about context. The right choice depends on your organization’s data volume, technical expertise, compliance needs, and whether you're executing a one-time migration or setting up continuous sync. Use the table below to compare key tool categories and evaluation factors before making a decision.

By thoroughly assessing these factors and including them in the migration plan, you can develop a realistic budget that creates a smoother transition.

For mid-sized companies and SMBs, it’s essential to choose a migration tool that balances performance and affordability.

QuartileX is one such platform: it offers solutions that simplify the migration process while saving you time and money.

QuartileX’s Data Migration is an AI-powered platform that offers businesses a comprehensive suite of data migration services. It is designed to ensure smooth, secure, and efficient migrations.

Their approach stands out through customized solutions that address each client's unique needs, such as data, analytics, and Gen-AI objectives. Whether you're upgrading legacy systems or switching to new platforms, the platform can scale as needs evolve.

Key Features of QuartileX’s Data Migration Services:

By focusing on minimizing risks and maximizing the potential of new systems, QuartileX helps businesses harness the full value of their data, creating a solid foundation for future growth.

Explore QuartileX’s tailored services and see how their proven solutions can help your business successfully transition to new systems.

Still having doubts about optimizing your data migration resources? Reach out to QuartileX's experts now!

When it comes to data migration, the difference between success and disruption often lies in your tools and preparation. Choosing the right migration resources ensures accuracy, security, compliance, and business continuity—especially when dealing with critical systems or sensitive data.

Vendor documentation, expert forums, and community-tested frameworks can make your migration faster, smoother, and far less risky.

Your 4-Step Action Plan: From Planning to Execution

1. Audit:

Start by mapping your current data landscape. Identify formats, dependencies, compliance needs, and downtime constraints.

2. Shortlist:

Evaluate tools that align with your project scale, migration type (cloud, hybrid, storage), and regulatory requirements.

3. Consult:

Use official vendor documentation, compatibility matrices, and migration guides to refine your tool selection and setup plan.

4. Test:

Run trial migrations in sandbox or staging environments to validate data integrity, performance, and rollback procedures before going live.
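A trial run with rollback can be rehearsed on a very small scale. The sketch below uses an in-memory style `sqlite3` connection as a stand-in for a staging database. The `users` table and the `migrate_with_rollback` helper are assumptions for illustration. It loads rows inside one transaction and undoes everything if the post-load row count is not what was expected.

```python
import sqlite3


def migrate_with_rollback(conn: sqlite3.Connection, rows, expected_count: int) -> bool:
    """Load rows in one transaction; roll back if validation fails.

    Assumes a hypothetical users(user_id, name) table already exists.
    Returns True if the load was committed, False if it was rolled back.
    """
    try:
        # As a context manager, the connection commits on success and
        # rolls back if the block raises.
        with conn:
            conn.executemany(
                "INSERT INTO users (user_id, name) VALUES (?, ?)", rows
            )
            (count,) = conn.execute("SELECT COUNT(*) FROM users").fetchone()
            if count != expected_count:
                raise ValueError(
                    f"expected {expected_count} rows, found {count}"
                )
    except (sqlite3.Error, ValueError):
        return False
    return True
```

The same pattern (load, validate, then commit or roll back) applies in staging environments for larger systems, although the rollback mechanism there is more likely to be a snapshot or backup restore than a single transaction.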

Ready to streamline your next migration? Choose your tools wisely. Use your resources fully. And test before you move. By selecting a reliable and cost-effective tool like QuartileX, businesses can ensure a smooth transition to new systems with minimal risk.

A: Key factors include data volume, migration frequency (one-time vs. recurring), source/target compatibility, regulatory requirements, and available team expertise. Cloud-native tools like AWS DMS work well for scalable, low-downtime migrations. On-premise tools such as Informatica are ideal for secure, high-control environments. Always evaluate cost, rollback features, and support for structured/unstructured data.

A: Tools like Informatica and Azure Migrate include encryption, audit logging, and data masking to meet GDPR, HIPAA, or industry-specific compliance standards. They also support role-based access control and error tracking to maintain data integrity. Compliance checklists and validation logs from vendor documentation further reduce legal and operational risks. Using sandbox environments can also help test and document compliance before deployment.

A: Open-source tools like Apache NiFi or Talend Open Studio are ideal when you need high customization, want to avoid vendor lock-in, or are operating under budget constraints. They're well-suited for experimental projects, hybrid environments, or teams with strong internal development resources. However, they may lack enterprise support, compliance guarantees, or advanced automation features found in premium platforms.

A: Even the best tools struggle if teams lack pre-migration audits, documentation, or a fallback plan. Common issues include schema mismatches, API incompatibilities, permission errors, and downtime miscalculations. Resources like migration guides, staging checklists, and expert forums offer real-world solutions that tools alone can’t address. Combining tools with reliable resources ensures a safer and faster transition.

A: Start by selecting tools like Fivetran or Qlik Replicate that support continuous sync and real-time pipelines. Ensure your architecture allows low-latency connections across clouds or between on-premise and cloud systems. Use documentation from platforms like AWS and Azure to establish best practices, and validate pipelines in staging environments. Monitor live migrations with automated alerts, and always have rollback procedures in place.
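To illustrate what continuous sync means in practice, here is a deliberately simplified watermark-based sketch. It is not a description of how Fivetran, Qlik Replicate, or any other product works internally. The `updated_at` and `id` fields, and the use of plain dictionaries as source and target, are assumptions for the example.

```python
def incremental_sync(source_rows, target: dict, watermark):
    """Copy rows changed after the watermark; return the new watermark.

    source_rows: iterable of dicts with 'id' and 'updated_at' keys.
    target: dict keyed by id, standing in for the target system.
    """
    new_watermark = watermark
    for row in source_rows:
        # Strict '>' skips rows already synced. Rows sharing the exact
        # watermark timestamp would be missed, so real pipelines often
        # re-read a small overlap window and upsert.
        if row["updated_at"] > watermark:
            target[row["id"]] = row
            new_watermark = max(new_watermark, row["updated_at"])
    return new_watermark
```

Each run only touches rows changed since the previous run, which is what keeps the source and target close to in sync without repeated full copies.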
